Artificial general intelligence, or AGI, has people both intrigued and fearful.

As a leading researcher in the field, OpenAI last July introduced the concept of superalignment via a team created to study scientific and technical breakthroughs to guide and ultimately control AI systems that are much more capable than humans. OpenAI refers to this level of AI as superintelligence.

Last week, this team unveiled the first results of an effort to supervise more powerful AI with less powerful models. While promising, the effort showed mixed results and brings to light several more questions about the future of AI and the ability of humans to actually control such advanced machine intelligence.

In this Breaking Analysis, we share the results of OpenAI’s superalignment research and what it means for the future of AI. We further probe ongoing questions about OpenAI’s unconventional structure, which we continue to believe is misaligned with its conflicting objectives of both protecting humanity and making money. We’ll also poke at a nuanced change in OpenAI’s characterization of its relationship with Microsoft. Finally, we’ll share some data that shows the magnitude of OpenAI’s lead in the market and propose some possible solutions to the structural problem faced by the industry.

OpenAI’s superalignment team unveils its first public research

With little fanfare, OpenAI unveiled the results of new research that describes a technique to supervise more powerful AI models with a less capable large language model. The paper is titled Weak-to-Strong Generalization: Eliciting Strong Capabilities with Weak Supervision.

The basic concept introduced is that superintelligent AI will be so vastly superior to humans that traditional supervision techniques such as reinforcement learning from human feedback, or RLHF, won’t scale. This “super AI,” the thinking goes, will be so sophisticated that humans won’t be able to comprehend its output. Instead, the team set out to test whether less capable GPT-2 models can supervise more capable GPT-4 models as a proxy for a supervision approach that could keep superintelligent systems from going rogue.

The superalignment team at OpenAI is led by Ilya Sutskever and Jan Leike. Sutskever’s name is highlighted on this graphic because much of the chatter on Twitter after this paper was released suggested that he was not cited as a contributor to the research. Perhaps his name was left off initially, given the recent OpenAI board meltdown, and then added later. Or perhaps the dozen or so commenters were mistaken, but that’s unlikely. At any rate, he’s clearly involved.

Can David AI control a superintelligent Goliath?

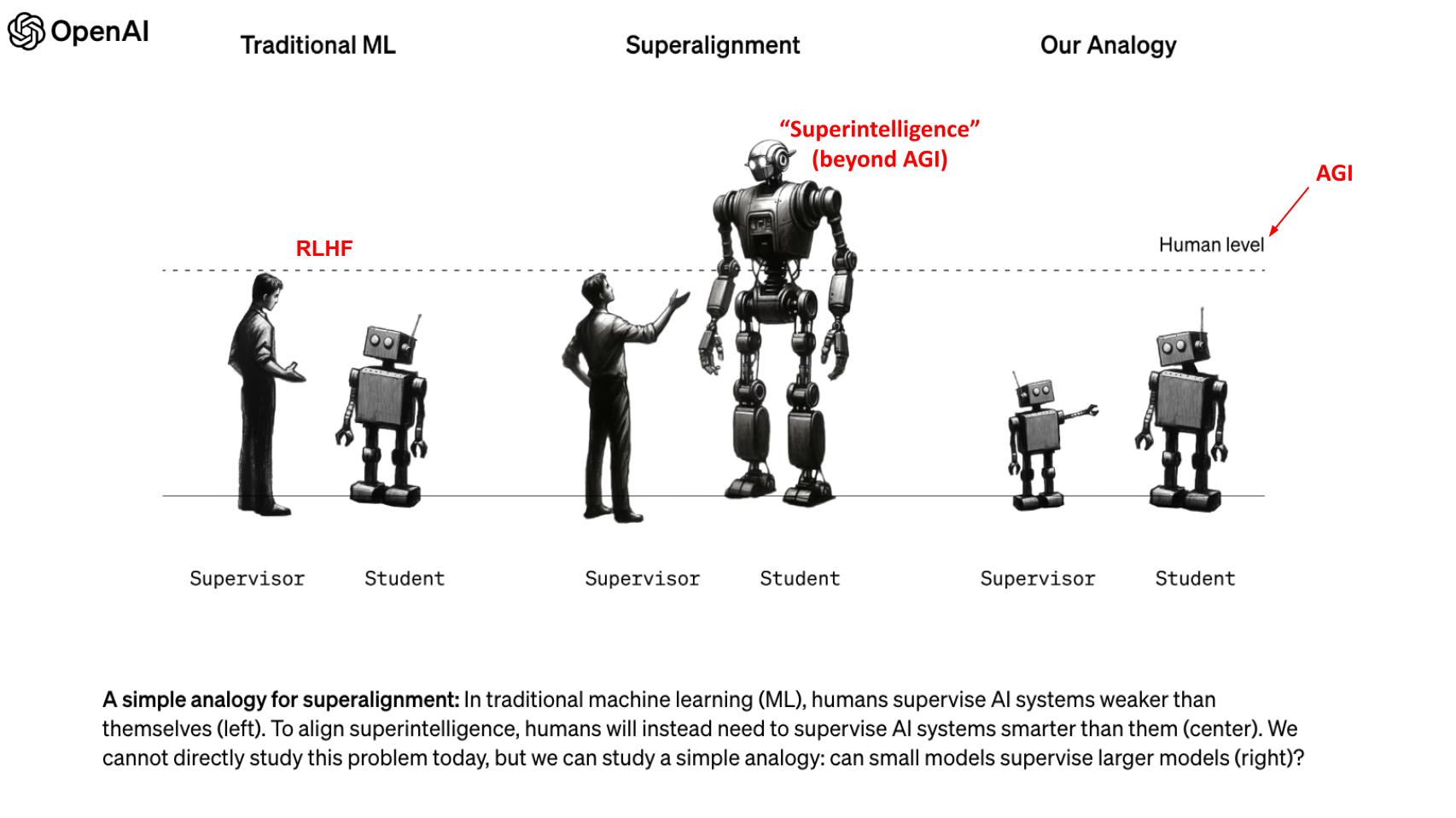

This graphic has been circulated across the web, so perhaps you’ve seen it. We’ve annotated it in red to add some additional color. The graphic shows that traditional machine learning models involve RLHF, where the outputs of a query are presented to humans to rate them. That feedback is then pumped back into the training regimen to improve model results.

Superalignment, shown in the center, would ostensibly involve a human trying unsuccessfully to supervise a much more intelligent AI, which presents a failure mode. For instance, the super AI could generate hundreds of thousands of lines of code that mere humans wouldn’t be able to understand. The problem, of course, is that superintelligence doesn’t exist, so it can’t be tested. But as a proxy, the third scenario shown here is that a less capable model, GPT-2 in this case, was set up to supervise a more advanced GPT-4 model.

For reference, we pin AGI at the human level of intelligence, recognizing that definitions vary depending on whom you speak with. Regardless, the team tested this idea to see whether the smarter AI would learn bad habits from the less capable AI and become “dumber,” or whether the results would close the gap between the less capable AI’s capabilities and a known ground truth set of labels that represent correct answers.

The methodology was thoughtful. The team tested several scenarios across natural language processing, chess puzzles and reward modeling, which is a technique to score responses to a prompt as a reinforcement signal to iterate toward a desired outcome. The results were mixed, however. The team measured the degree to which the performance of a GPT-4 model supervised by GPT-2 closed the gap on known ground truth labels. They found that the more capable model supervised by the less capable AI performed 20% to 70% better than GPT-2 on the language tasks but did less well on other tests.
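To make the “closing the gap” idea concrete, here is a minimal sketch of how such a measurement can be computed. It follows the “performance gap recovered” metric as we understand it from the OpenAI paper: the share of the gap between the weak supervisor and a strong model trained on ground truth that the weakly supervised strong model recovers. The function name and the accuracy figures are our own assumptions, chosen only to illustrate the arithmetic; they are not results from the research.

```python
def performance_gap_recovered(weak_acc: float,
                              weak_to_strong_acc: float,
                              strong_ceiling_acc: float) -> float:
    """Fraction of the weak-to-ceiling gap that the weakly supervised
    strong model recovers (hypothetical helper, not OpenAI's code).

    weak_acc:            accuracy of the weak supervisor (e.g. GPT-2 level)
    weak_to_strong_acc:  accuracy of the strong model trained on weak labels
    strong_ceiling_acc:  accuracy of the strong model trained on ground truth
    """
    gap = strong_ceiling_acc - weak_acc
    if gap <= 0:
        raise ValueError("strong ceiling must exceed the weak supervisor's accuracy")
    return (weak_to_strong_acc - weak_acc) / gap


# Made-up accuracies, for illustration only.
pgr = performance_gap_recovered(weak_acc=0.60,
                                weak_to_strong_acc=0.75,
                                strong_ceiling_acc=0.90)
print(f"PGR = {pgr:.2f}")
```

A value of 1.0 would mean the stronger model reached its full potential despite the weak teacher, while 0.0 would mean it did no better than its supervisor. Values in between correspond to the mixed picture described above.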

The researchers are encouraged that GPT-4 outdid its supervisor and believe this shows promising potential. But the smarter model had greater capabilities that weren’t unlocked by the teacher, calling into question the ability of a less capable AI to control a smarter model.

In thinking about this problem, one can’t help but recall the scene from the movie “Good Will Hunting.”

Is there a ‘supervision tax’ in AI safety?

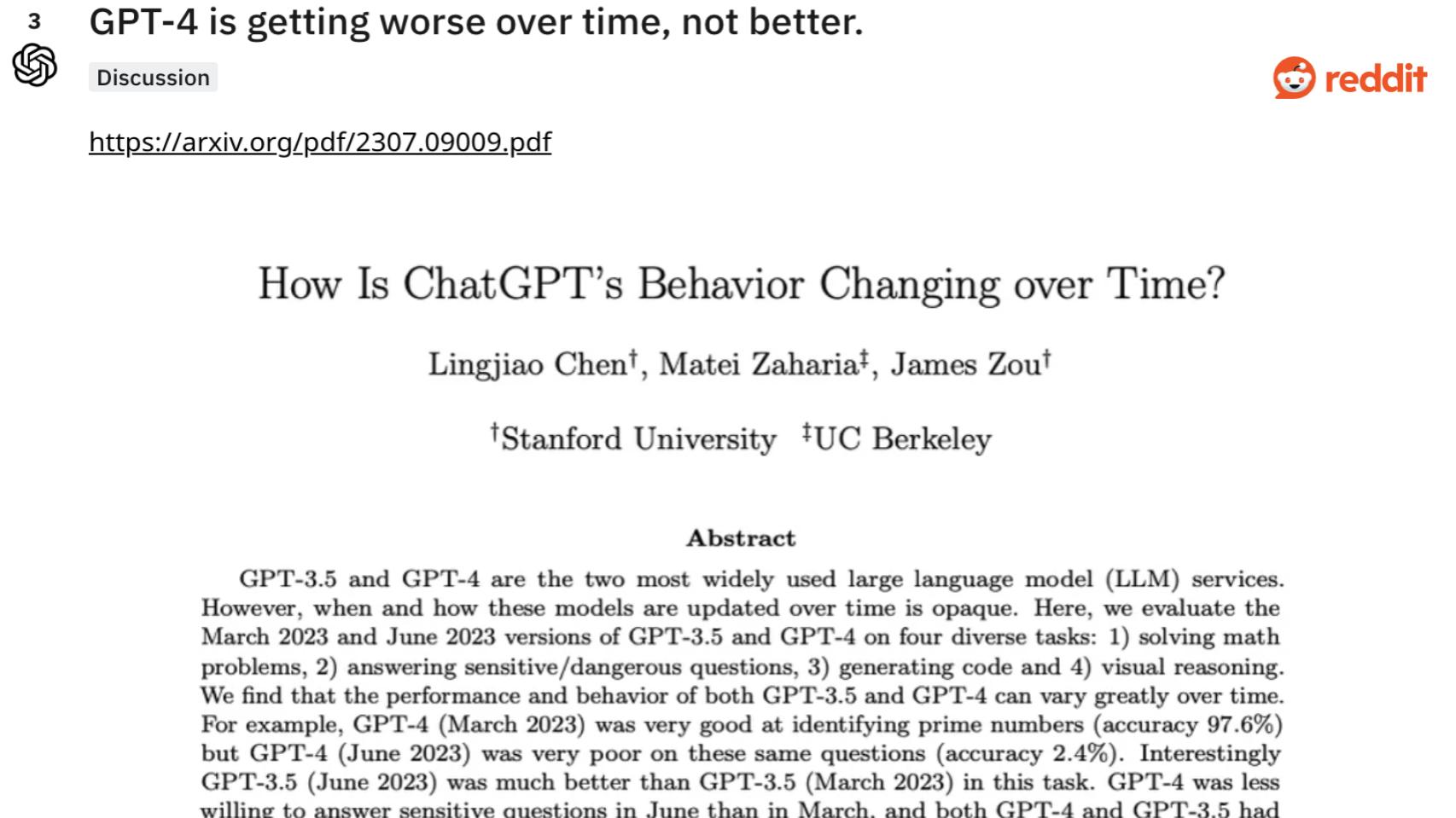

There are several threads on social media, specifically on Reddit, lamenting the frustration with GPT-4 getting “dumber.” A research paper by Stanford and UC Berkeley published this summer points out the drift in accuracy over time. Theories have circulated as to why, ranging from architectural challenges to memory issues, with some of the most popular holding that the need for so-called guardrails has dumbed down GPT-4 over time.

Customers of ChatGPT’s for-pay service have been particularly vocal about paying for a service that’s degrading in quality over time. However, many of these claims are anecdotal. It’s unclear to what extent the quality of GPT-4 is really degrading, because it’s difficult to track such a fast-moving target. Moreover, there are many examples where GPT-4 is improving, such as in remembering prompts and producing fewer hallucinations.

Regardless, the point is that this controversy further underscores the many alignment challenges between government and private industry, for-profit versus nonprofit objectives, AI safety, and regulation conflicting with innovation and progress. Right now the market is like the Wild West, with plenty of hype and diverging opinions.

OpenAI changes the language regarding Microsoft’s ownership

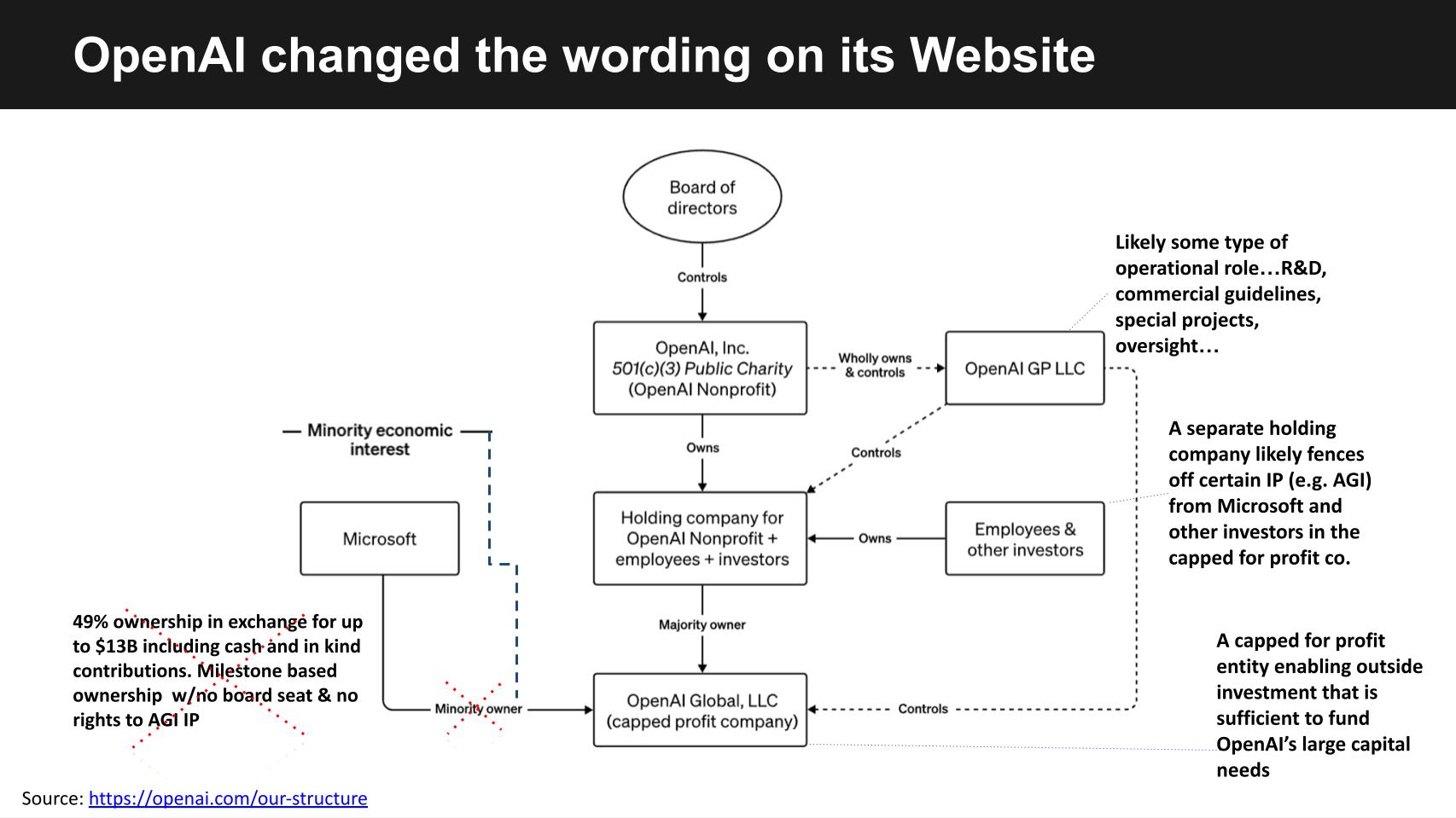

In a post last month covering the OpenAI governance failure, we showed this graphic from OpenAI’s website. As we discussed this past week with John Furrier on theCUBE Pod, the way in which OpenAI and Microsoft are characterizing their relationship has quietly changed.

To review briefly, the graphic shows the convoluted and, in our view, misaligned structure of OpenAI. It’s controlled by a 501(c)(3) nonprofit public charity with a mission to do good AI for humanity. That board controls an LLC, which provides oversight and also controls a holding company owned by employees and investors such as Khosla Ventures, Sequoia and others. This holding company owns a majority of another LLC, which is a capped-profit company.

Previously on OpenAI’s website, Microsoft was cited as a “Minority owner.” That language has now changed to reflect Microsoft’s “Minority economic interest,” which we believe is a 49% stake in the capped profits of the LLC. Quite obviously, this change was precipitated by the U.K. and U.S. governments looking into the relationship between Microsoft and OpenAI, which is fraught with misalignment, as we saw with the firing and rehiring of Chief Executive Sam Altman and the subsequent board observer seat concession that OpenAI made to Microsoft.

The partial answer, in our view, is to create two separate boards and governance structures: one to govern the nonprofit and a separate board to manage the for-profit business of OpenAI. But that alone won’t solve the superalignment problem, assuming superhuman intelligence is a given, which it isn’t necessarily.

The AI market is bifurcated

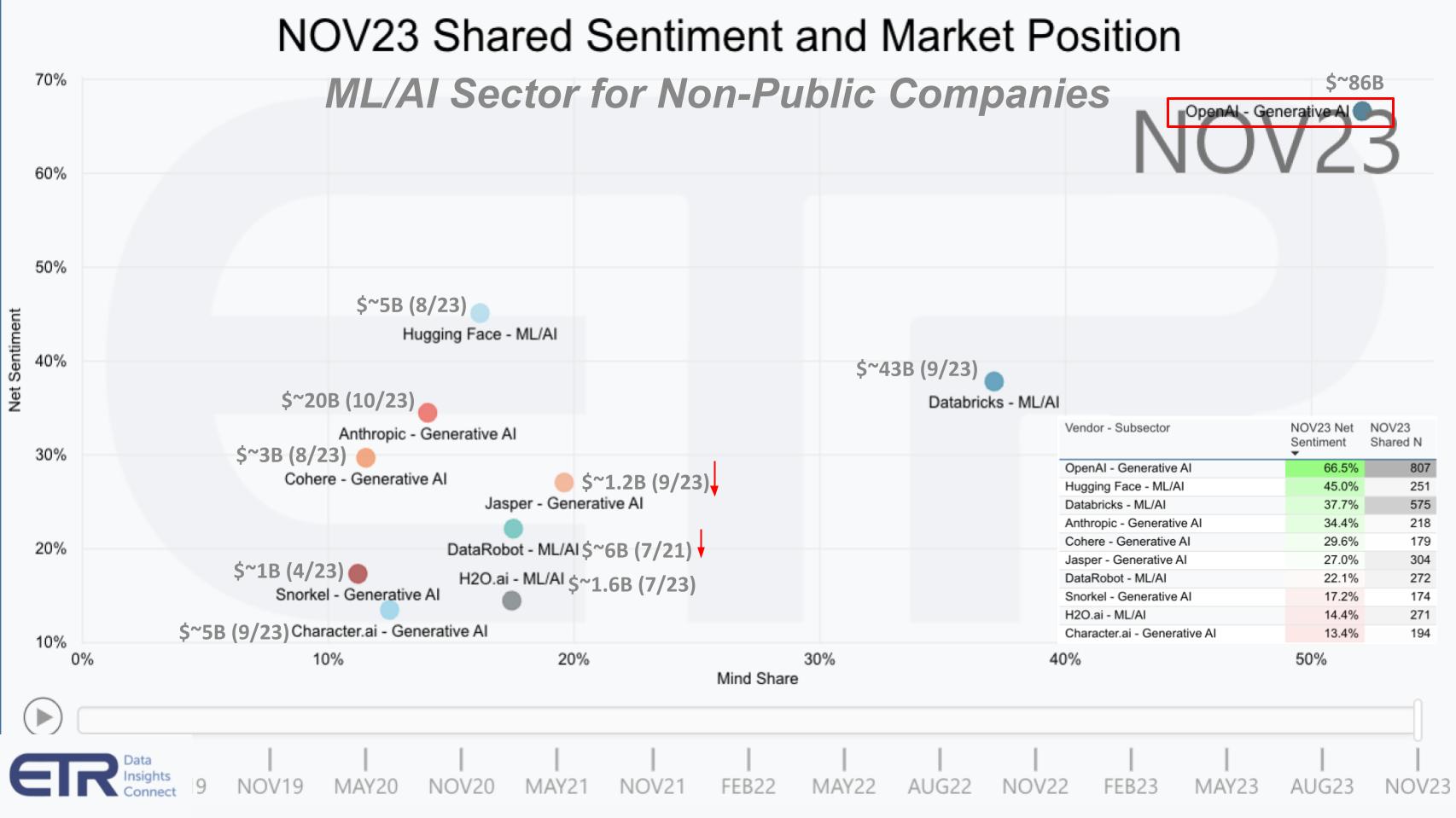

To underscore the wide schisms in the AI marketplace, let’s take a look at this Enterprise Technology Research data from the Emerging Technology Survey, or ETS, which measures market sentiment and mindshare among privately held companies. Here we’ve isolated the ML/AI sector, which comprises traditional AI plus LLM players, as cited in the annotations. We’ve also added the most recent market valuation data for each of the firms. The chart shows Net Sentiment on the vertical axis, which is a measure of intent to engage, and mindshare on the horizontal axis, which measures awareness of the company.

The first point is that OpenAI’s position is literally off the charts in both dimensions. Its lead with respect to these metrics is overwhelming, as is its $86 billion valuation. On paper it’s more valuable than Snowflake Inc. (not shown here) and Databricks Inc., with its reported $43 billion valuation. Both Snowflake and Databricks are extremely successful and established firms with thousands of customers.

Hugging Face is high up on the vertical axis; think of it as the GitHub for AI model engineers. As of this summer, its valuation was at $5 billion. Anthropic PBC is prominent and, with its investments from Amazon Web Services Inc. and Google LLC, it touts a recent $20 billion valuation, while Cohere this summer reportedly had a $3 billion valuation.

Jasper AI is a popular marketing platform that’s seeing downward pressure on its valuation because ChatGPT is disruptive to its value proposition at a much lower cost. DataRobot Inc. had a $6 billion valuation at the peak of the tech bubble, but after some controversies around selling insider shares, its value has declined. You can also see here H2O.ai Inc. and Snorkel AI Inc. with unicorn-like valuations, and Character.ai, a chatbot generative AI platform that was recently reported to have a $5 billion valuation.

So you can see the gap between OpenAI and the pack. You can also clearly see that emergent competitors to OpenAI are commanding higher valuations than the traditional machine learning players. In general, our view is that AI broadly and generative AI specifically are a tide that will lift all boats. But some boats will be able to ride the wave more successfully than others and so far, despite its governance challenges, OpenAI and Microsoft have been in the best position.

Key questions on superintelligence

There are many questions around AGI and now super AI as this new parlance of superintelligence and superalignment emerges. First, is this vision aspirational or is it truly technically feasible? Experts such as John Roese, chief technology officer of Dell, have said all the pieces are there for AGI to become a reality; there’s just not enough economically feasible compute today and the quality of data is still lacking. But from a technological standpoint, he agrees with OpenAI that it’s coming.

If that’s the case, how will the objectives of superalignment (aka control) affect innovation, and what are the implications of the industry leader having a governance structure that’s controlled by a nonprofit board? Can its objectives truly win out over the profit motives of an entire industry? We tend to doubt it, and the reinstatement of Altman as CEO underscores who’s going to win that battle. Altman was the big winner in all that drama, not Microsoft.

So to us, the structure of OpenAI has to change. The company should be split in two, with separate boards for the nonprofit and the commercial arm. And if the mission of OpenAI truly is to develop and direct artificial intelligence in ways that benefit humanity as a whole, then why not split the company in two and open up the governance structure of the nonprofit to other players, including OpenAI competitors and governments?

On the issue of superintelligence, beyond AGI, what happens when AI becomes autodidactic and becomes a true self-learning system? Can that really be controlled by less capable AI? The conclusion of OpenAI researchers is that humans clearly won’t be able to control it.

But before you get too scared, there are skeptics who feel that we’re still far away from AGI, let alone superintelligence. Hence point No. 5 here: Is this a case where Zeno’s paradox applies? Zeno’s paradox, you may remember from high school math classes, states that any moving object must reach the halfway point on a course before it reaches the end; and since there are an infinite number of halfway points, a moving object never reaches the end in a finite time.

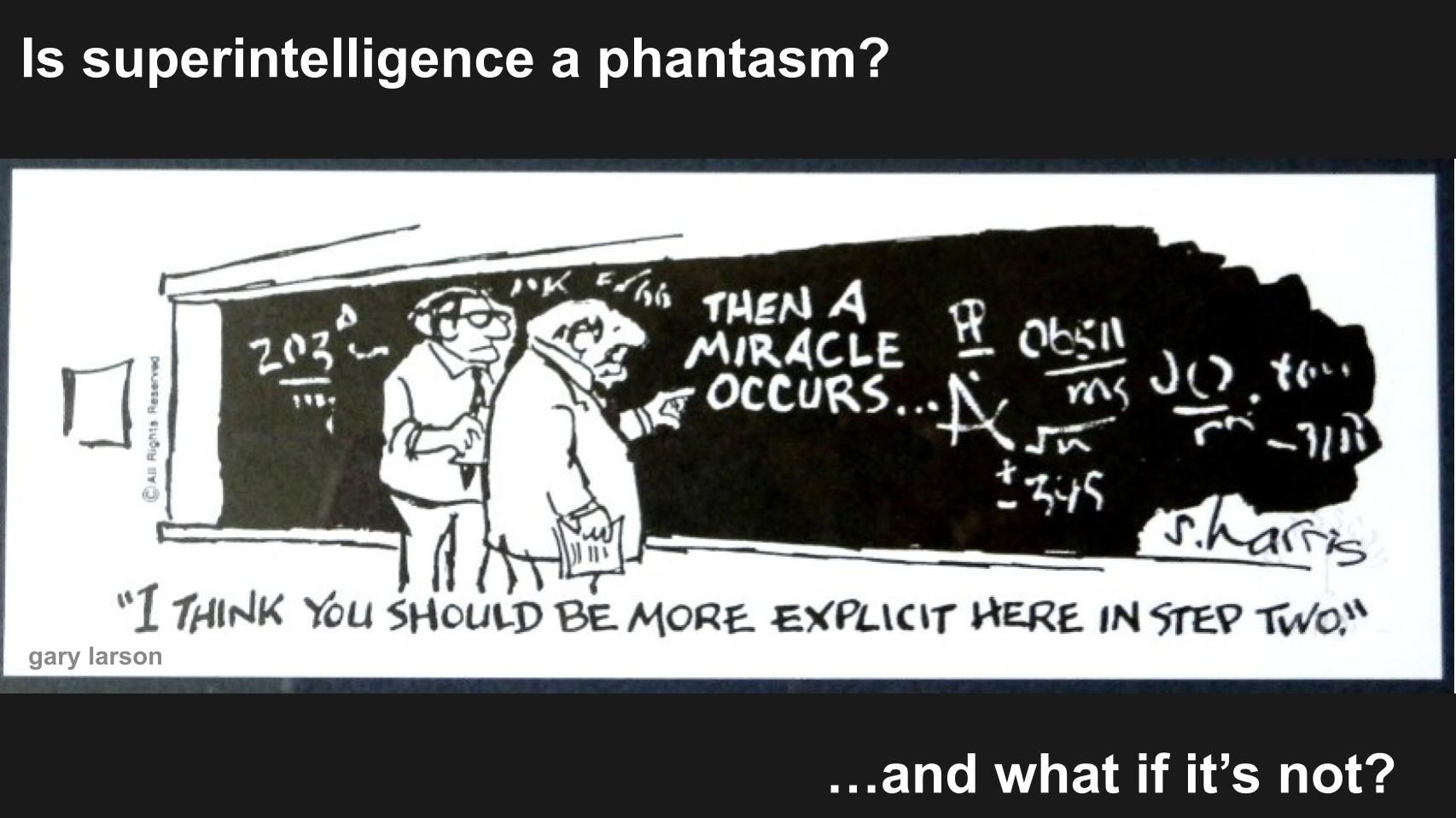

Is superintelligence a fantasy?

This graphic sums up the opinions of the skeptics. It shows a super-complicated equation with a step in the math that says, “Then a Miracle Occurs.” It’s kind of where we are with AGI and superintelligence… like waiting for Godot.

We don’t often use the phrase “time will tell” in these segments. As analysts, we like to be more precise and opinionated, with data to back those opinions. But in this case we simply don’t know.

But let’s leave you with a thought experiment that Arun Subramaniyan put forth at Supercloud 4 this past October. We asked him for his thoughts on AGI, and the same applies to superintelligence. His premise was this: Assume for a minute that AGI is here. Wouldn’t the AI know that we as humans would be wary of the AI and try to control it? So wouldn’t the smart AI act in such a way as to hide its true intentions? Ilya Sutskever has stated this is a concern.

The point being, if super AI is that much smarter than humans, then it will be able to outsmart us easily and control us, rather than us controlling it. And that’s the best case for creating structures that allow those concerned about AI safety to pursue a mission independent of a profit-driven agenda. Because a profit motive will almost always win over an agenda that sets out to simply do the right thing.

Keep in touch

Thanks to Alex Myerson and Ken Shifman on production, podcasts and media workflows for Breaking Analysis. Special thanks to Kristen Martin and Cheryl Knight, who help us keep our community informed and get the word out, and to Rob Hof, our editor in chief at SiliconANGLE.

Remember we publish each week on Wikibon and SiliconANGLE. These episodes are all available as podcasts wherever you listen.

Email david.vellante@siliconangle.com, DM @dvellante on Twitter and comment on our LinkedIn posts.

Also, check out this ETR Tutorial we created, which explains the spending methodology in more detail. Note: ETR is a separate company from Wikibon and SiliconANGLE. If you would like to cite or republish any of the company’s data, or inquire about its services, please contact ETR at legal@etr.ai.


All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by SiliconANGLE Media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions.

Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of Wikibon. None of these firms or other companies have any editorial control over or advanced viewing of what’s published in Breaking Analysis.

Image: InvisibleWizard/Adobe Stock
