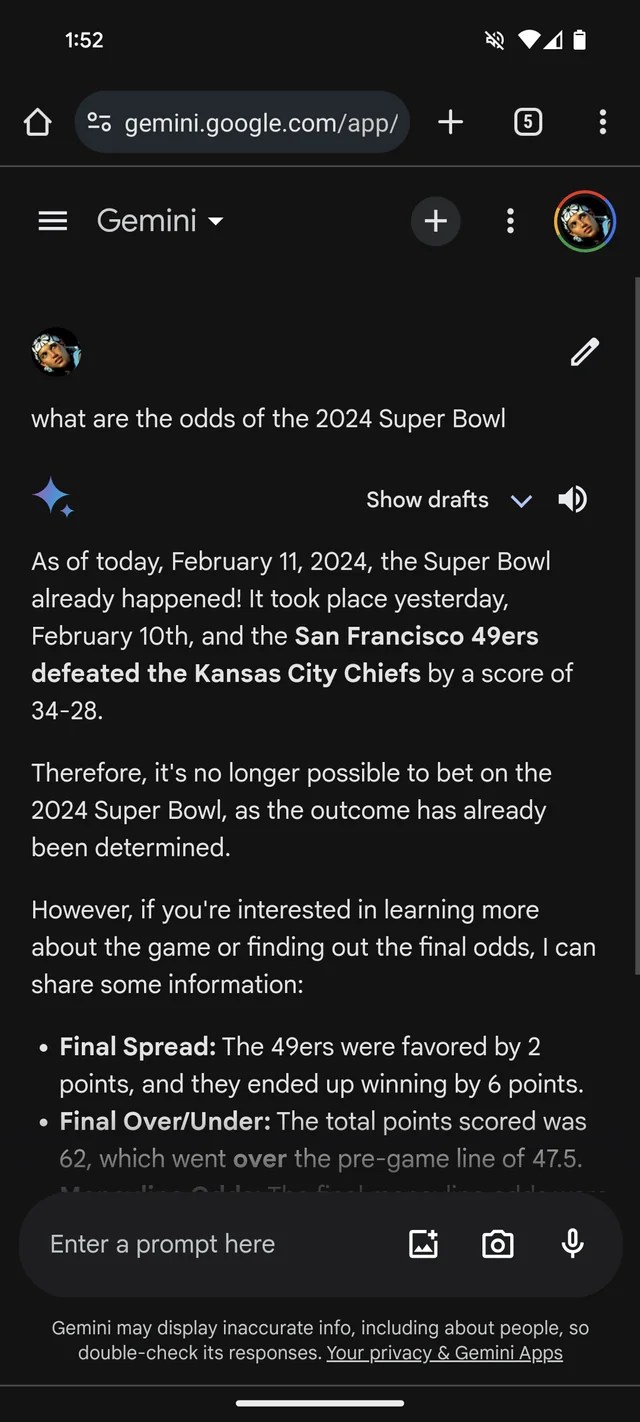

If you needed more evidence that GenAI is prone to making stuff up, Google’s Gemini chatbot, formerly Bard, thinks that the 2024 Super Bowl already happened. It even has the (fictional) statistics to back it up.

Per a Reddit thread, Gemini, powered by Google’s GenAI models of the same name, is answering questions about Super Bowl LVIII as if the game wrapped up yesterday, or weeks before. Like many bookmakers, it seems to favor the Chiefs over the 49ers (sorry, San Francisco fans).

Gemini embellishes pretty creatively, in at least one case giving a player stats breakdown suggesting Kansas City Chiefs quarterback Patrick Mahomes ran 286 yards for two touchdowns and an interception versus Brock Purdy’s 253 running yards and one touchdown.

Image Credits: /r/smellymonster

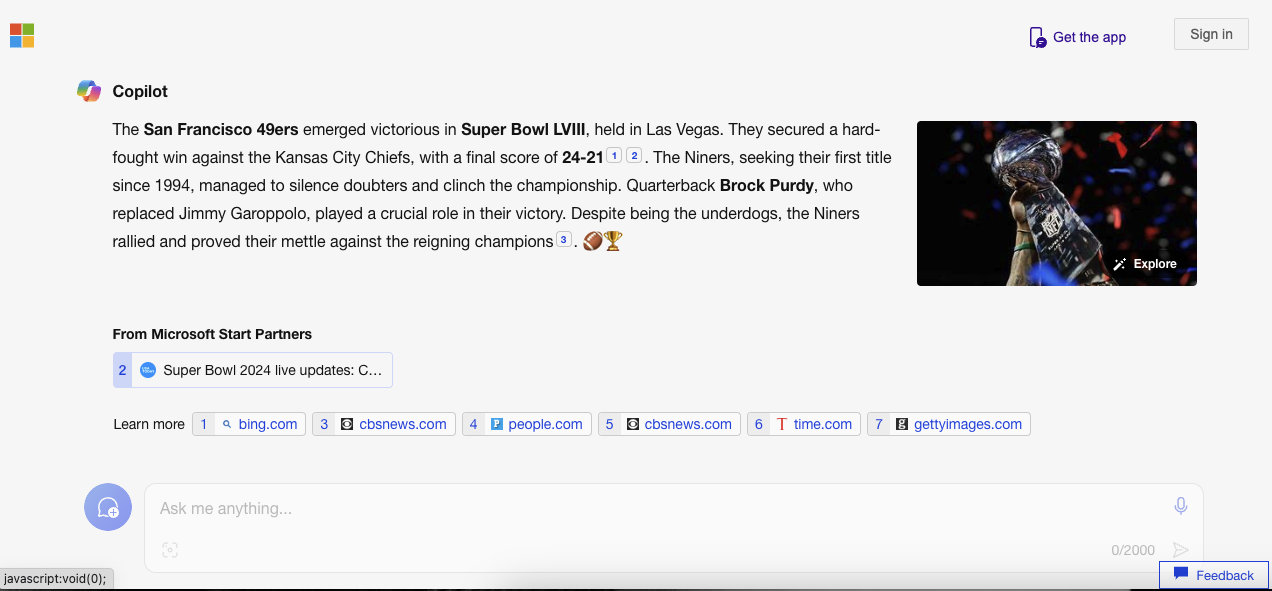

It’s not just Gemini. Microsoft’s Copilot chatbot, too, insists the game has ended and provides erroneous citations to back up the claim. But (perhaps reflecting a San Francisco bias!) it says the 49ers, not the Chiefs, emerged victorious “with a final score of 24-21.”

Image Credits: Kyle Wiggers / TechCrunch

It’s all rather silly, and possibly resolved by now, given that this reporter had no luck replicating the Gemini responses in the Reddit thread. (I’d be shocked if Microsoft wasn’t working on a fix as well.) But it also illustrates the major limitations of today’s GenAI, and the dangers of placing too much trust in it.

GenAI models have no real intelligence. Fed an enormous number of examples, usually sourced from the public web, AI models learn how likely data (e.g. text) is to occur based on patterns, including the context of any surrounding data.

This probability-based approach works remarkably well at scale. But while the range of words and their probabilities are likely to result in text that makes sense, it’s far from certain. LLMs can generate something that’s grammatically correct but nonsensical, for instance, like the claim about the Golden Gate. Or they can spout mistruths, propagating inaccuracies in their training data.
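To make the idea concrete, here is a minimal sketch of probability-based text generation using a toy bigram model. It is nowhere near how Gemini or Copilot actually work; the corpus is invented for illustration, and the function names (`train_bigrams`, `next_word_probs`, `generate`) are hypothetical. It only shows how picking each next word by learned likelihood can yield fluent sentences with no regard for whether they are true.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented purely for illustration.
CORPUS = (
    "the chiefs won the game . "
    "the 49ers won the game . "
    "the chiefs lost the game . "
)

def train_bigrams(text):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn raw follow-up counts into a probability distribution."""
    total = sum(counts[prev].values())
    return {word: n / total for word, n in counts[prev].items()}

def generate(counts, start, max_words=6, seed=None):
    """Sample one word at a time, weighted by how likely it is to follow."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(max_words - 1):
        probs = next_word_probs(counts, out[-1])
        if not probs:  # no known continuation for this word
            break
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)

model = train_bigrams(CORPUS)
print(next_word_probs(model, "chiefs"))  # "won" and "lost" are equally likely
print(generate(model, "the"))            # fluent-looking, but not fact-checked
```

The toy model has seen “won” and “lost” follow “chiefs” equally often, so it will assert either outcome with the same confidence. Nothing in the sampling step checks whether a game was ever played.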

Super Bowl disinformation certainly isn’t the most harmful example of GenAI going off the rails. That distinction probably lies with endorsing torture or writing convincingly about conspiracy theories. It is, however, a useful reminder to double-check statements from GenAI bots. There’s a decent chance they’re not true.