AI-powered video generation is a hot market following OpenAI’s release of its Sora model last month. Two DeepMind alums, Yishu Miao and Ziyu Wang, have publicly released their video generation tool, Haiper, which runs on its own AI model.

Miao, who previously worked at TikTok on the Global Trust & Safety team, and Wang, who has worked as a research scientist for both DeepMind and Google, began working on the company in 2021 and formally incorporated it in 2022.

The pair has expertise in machine learning and started out working on the problem of 3D reconstruction using neural networks. After training on video data, Miao told TechCrunch on a call, they found that video generation was a more fascinating problem than 3D reconstruction. That’s why Haiper shifted its focus to video generation roughly six months ago.

Haiper has raised $13.8 million in a seed round led by Octopus Ventures with participation from 5Y Capital. Before that, angels like Geoffrey Hinton and Nando de Freitas helped the company raise a $5.4 million pre-seed round in April 2022.

Video generation service

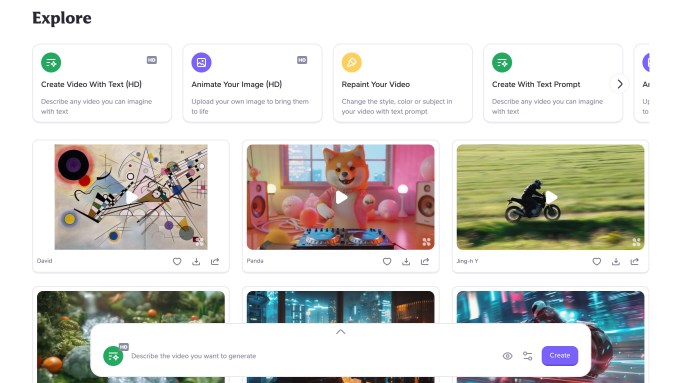

Users can go to Haiper’s site and start generating videos for free by typing in text prompts. However, there are certain limitations. You can only generate a two-second HD video or a slightly lower-quality video of up to four seconds.

Image Credits: Haiper

The site also has features like animating your image and repainting your video in a different style. Plus, the company is working to introduce capabilities like the ability to extend a video.

Miao said that the company aims to keep these features free in order to build a community. He noted that it’s “too early” in the startup’s journey to think about building a subscription product around video generation. However, it has collaborated with companies like JD.com to explore commercial use cases.

We used one of the original Sora prompts to generate a sample video: “Several giant wooly mammoths approach treading through a snowy meadow, their long wooly fur lightly blows in the wind as they walk, snow-covered trees and dramatic snow-capped mountains in the distance, mid-afternoon light with wispy clouds and a sun high in the distance creates a warm glow, the low camera view is stunning capturing the large furry mammal with beautiful photography, depth of field”

Building a core video model

While Haiper is currently focusing on its consumer-facing website, it wants to build a core video generation model that could be offered to others. The company hasn’t made any details about the model public.

Miao said that the company has privately reached out to a number of developers to try its closed API. He expects developer feedback to be very important as the company iterates on the model rapidly. Haiper has also considered open-sourcing its models down the line to let people explore different use cases.

The CEO believes that, for now, it’s important to solve the uncanny valley problem in video generation (the phenomenon in which AI-generated human-like figures evoke eerie feelings in viewers).

“We are not working on solving problems in the content and style area, but we are trying to work on fundamental issues like how AI-generated humans look while walking or snow falling,” he said.

The company currently has around 20 employees and is actively hiring for multiple roles across engineering and marketing.

Competition ahead

OpenAI’s recently released Sora is probably the most popular competitor for Haiper at the moment. However, there are other players like Google- and Nvidia-backed Runway, which has raised over $230 million in funding. Google and Meta also have their own video generation models. Last year, Stability AI announced its Stable Video Diffusion model in research preview.

Rebecca Hunt, a partner at Octopus Ventures, believes that in the next three years, Haiper will have to build a strong video generation model to achieve differentiation in this market.

“There are realistically only a handful of people positioned to achieve this; this is one of the reasons we wanted to back the Haiper team. Once the models get to a point that transcends the uncanny valley and reflects the real world and all its physics, there will be a period where the applications are infinite,” she told TechCrunch over email.

While investors are looking to invest in AI-powered video generation startups, they also think the technology still has a lot of room for improvement.

“It feels like AI video is at GPT-2 level. We’ve made big strides in the last year, but there’s still a way to go before everyday consumers are using these products on a daily basis. When will the ‘ChatGPT moment’ arrive for video?” a16z’s Justine Moore wrote last year.