It has been a wildly busy week in AI news thanks to OpenAI, including a controversial blog post from CEO Sam Altman, the wide rollout of Advanced Voice Mode, 5GW data center rumors, major staff shake-ups, and dramatic restructuring plans.

But the rest of the AI world doesn't march to the same beat, doing its own thing and churning out new AI models and research by the minute. Here's a roundup of some other notable AI news from the past week.

Google Gemini updates

On Tuesday, Google announced updates to its Gemini model lineup, including the release of two new production-ready models that iterate on past releases: Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. The company reported improvements in overall quality, with notable gains in math, long-context handling, and vision tasks. Google claims a 7 percent increase in performance on the MMLU-Pro benchmark and a 20 percent improvement in math-related tasks. But if you've been reading Ars Technica for a while, you know that AI benchmarks typically aren't as useful as we would like them to be.

Along with the model upgrades, Google introduced substantial price reductions for Gemini 1.5 Pro, cutting input token costs by 64 percent and output token costs by 52 percent for prompts under 128,000 tokens. As AI researcher Simon Willison noted on his blog, "For comparison, GPT-4o is currently $5/[million tokens] input and $15/m output and Claude 3.5 Sonnet is $3/m input and $15/m output. Gemini 1.5 Pro was already the most cost effective of the frontier models and now it's even cheaper."
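To make the per-million-token pricing a little more concrete, here is a small Python sketch of the arithmetic. The GPT-4o and Claude 3.5 Sonnet prices are the ones Willison quoted; the workload (10 million input tokens, 2 million output tokens) and the $10/m starting price used to illustrate the percentage cuts are hypothetical, since this article doesn't list Gemini's absolute prices. Per Google, the 64 and 52 percent cuts apply only to prompts under 128,000 tokens.

```python
# Per-million-token prices (USD), as quoted by Simon Willison.
PRICES = {
    "GPT-4o": {"input": 5.00, "output": 15.00},
    "Claude 3.5 Sonnet": {"input": 3.00, "output": 15.00},
}


def workload_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a workload given per-million-token prices."""
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


def price_after_cut(price: float, percent_cut: float) -> float:
    """Return a price after a percentage reduction (a 64 percent cut keeps 36 percent)."""
    return price * (1 - percent_cut / 100)


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens.
    for name, p in PRICES.items():
        print(f"{name}: ${workload_cost(p, 10_000_000, 2_000_000):.2f}")
    # prints "GPT-4o: $80.00" and "Claude 3.5 Sonnet: $60.00"

    # Hypothetical $10/m starting price, showing the effect of 64% and 52% cuts.
    print(f"${price_after_cut(10.0, 64):.2f} ${price_after_cut(10.0, 52):.2f}")
    # prints "$3.60 $4.80"
```

Because output tokens cost several times more than input tokens for all of these models, the output-price cut matters most for generation-heavy workloads, while the larger input-price cut favors applications that send long prompts.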

Google also increased rate limits, with Gemini 1.5 Flash now supporting 2,000 requests per minute and Gemini 1.5 Pro handling 1,000 requests per minute. Google reports that the latest models offer twice the output speed and three times lower latency compared with previous versions. These changes may make it easier and cheaper for developers to build applications with Gemini than before.

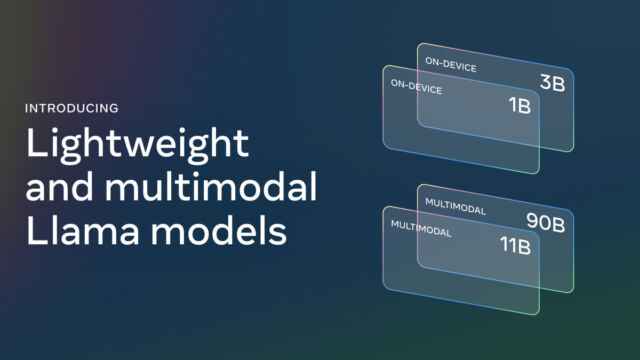

Meta launches Llama 3.2

On Wednesday, Meta announced the release of Llama 3.2, a major update to its open-weights AI model lineup that we've covered extensively in the past. The new release includes vision-capable large language models (LLMs) in 11B and 90B parameter sizes, as well as lightweight text-only models of 1B and 3B parameters designed for edge and mobile devices. Meta claims the vision models are competitive with leading closed-source models on image recognition and visual understanding tasks, while the smaller models reportedly outperform similar-sized competitors on various text-based tasks.

Willison did some experiments with some of the smaller 3.2 models and reported impressive results for the models' size. AI researcher Ethan Mollick showed off running Llama 3.2 on his iPhone using an app called PocketPal.

Meta also introduced the first official "Llama Stack" distributions, created to simplify development and deployment across different environments. As with previous releases, Meta is making the models available for free download, with license restrictions. The new models support long context windows of up to 128,000 tokens.

Google's AlphaChip AI speeds up chip design

On Thursday, Google DeepMind announced what appears to be a significant advancement in AI-driven electronic chip design: AlphaChip. It began as a research project in 2020 and is now a reinforcement learning method for designing chip layouts. Google has reportedly used AlphaChip to create "superhuman chip layouts" in the last three generations of its Tensor Processing Units (TPUs), which are chips similar to GPUs designed to accelerate AI operations. Google claims AlphaChip can generate high-quality chip layouts in hours, compared to weeks or months of human effort. (Reportedly, Nvidia has also been using AI to help design its chips.)

Notably, Google also released a pre-trained checkpoint of AlphaChip on GitHub, sharing the model weights with the public. The company reported that AlphaChip's impact has already extended beyond Google, with chip design firms like MediaTek adopting and building on the technology for their chips. According to Google, AlphaChip has sparked a new line of research in AI for chip design, potentially optimizing every stage of the chip design cycle, from computer architecture to manufacturing.

That wasn't everything that happened, but those are some major highlights. With the AI industry showing no signs of slowing down at the moment, we'll see how next week goes.