Investors have poured north of $30 billion into independent foundation model players, including OpenAI, Anthropic PBC, Cohere Inc. and Mistral AI, among others. OpenAI itself has raised more than $20 billion. To keep pace, both Meta Platforms Inc. and xAI Corp. will have to spend comparable amounts.

In our view, the stampeding herd of large language model investors is blindly focused on the false grail of so-called artificial general intelligence, or AGI. We believe the greatest value capture exists in what we’re calling “Enterprise AGI,” metaphorically represented by the proprietary data estates and managerial knowhow locked inside firms such as JPMorgan Chase & Co. and virtually every enterprise.

Generalized LLMs won’t have open access to the specialized data, tools and processes necessary to harness proprietary intelligence. As such, we believe private enterprises will capture most of the value in the race for AI leadership.

In this Breaking Analysis, we dig deep into the economics of foundation models and we analyze an opportunity that few have fully assessed: specifically, what we’re calling Enterprise AGI and the untapped opportunities that exist inside enterprises. While many firms discuss proprietary data as the linchpin of this opportunity, very few in our view have thought through the real missing pieces and gaps that we hope to explain for you today.

The false grail of AGI

Let’s start with the catalyst for the AI wave we’re currently riding. Below we show OpenAI Chief Executive Sam Altman. He’s often associated with the drive to create AGI. We’re using a definition of AGI where the AI can perform any economically useful function better than humans can. We’re not referring to superintelligence or Ray Kurzweil’s idea that our consciousness will be stored and perpetually survivable; rather, we’re talking about machines doing business work better than humans.

In the middle we show a scene from “Indiana Jones and the Last Crusade,” where the character drinks from a golden chalice, believing it to be the Holy Grail. In the context of AGI, the Holy Grail represents the ultimate achievement in AI: the true AGI that so many are chasing.

The rightmost image is from the same movie, showing the person after he drinks from the wrong grail. The meme suggests that while the pursuit of AGI (the Holy Grail) is appealing, its outcomes are perhaps illusory.

Indy was more humble in his quest

Indy didn’t go for the chalice that he believed belonged to a king. He, as an archaeologist, searched for something far more prosaic: as he said, “the cup of a carpenter.”

Similarly, AGI in the enterprise may really be the pursuit of something far more mundane than the intergalactic AGI we discussed above. It’s the ability to gradually learn more and more of the white-collar work processes of the firm.

And instead of one all-intelligent AGI, it’s really a swarm of modestly intelligent agents that can collectively augment human white-collar work. So rather than one AI to rule them all, which many people agree may not be a likely scenario, we’re thinking of something different.

Imagine a world with many thousands of reliable white-collar worker agents

Instead, we envision “worker bee” AIs that can perform mundane tasks more efficiently than humans and, over time, become increasingly adept at more advanced tasks.

Nvidia CEO Jensen Huang recently said something that resonated with us:

Nvidia today has 32,000 employees. And I’m hoping that Nvidia someday will be a 50,000-employee company with 100 million AI assistants in each group. We’ll have a whole directory of AIs that are just generally good at doing things… and we’ll also have AIs that are really specialized and expert. We’ll be one large worker base…. Some will be digital, some biological. – Jensen Huang on the BG2 Podcast

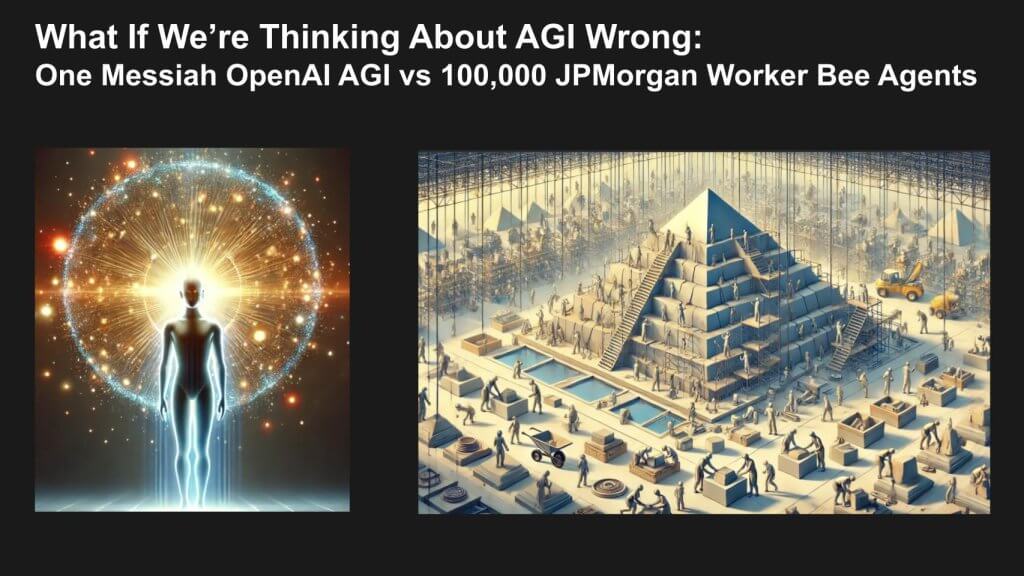

The big distinction we’re trying to draw in this research is the difference between creating an all-knowing AGI and deploying swarms of worker agents.

There are frontier model companies that are trying to create what we’re referring to as the “Messiah AGI,” a God-like intelligence that is itself smarter than collective biological intelligence. And then there is the concept of 100,000 worker bees, each trained and specialized to do one or even a few tasks quite well. The Messiah AGI is an attempt to build a system with the intelligence to perform all the tasks that run a modern enterprise. By contrast, the more humble goal of the worker bee AGIs is to learn from and augment their human supervisors, and make them far more productive.

There’s a big distinction between creating a Messiah AGI and making 100,000 worker bees productive. And our premise today is that the data, processes, knowhow and tooling to build the latter aren’t generally accessible to foundation models trained on the internet. Even with synthetic data generation, there are critical missing pieces, which we’ll discuss below.

Comparing a number of leading LLM players

Let’s take a look at the current Enterprise Technology Research data with respect to LLMs and generative AI players.

This XY graph shows spending momentum, or Net Score, on the vertical axis and Overlap, or penetration in the data set, on the horizontal axis. OpenAI and Microsoft Corp. lead in momentum and penetration, while the gap in AI between Google LLC and Amazon Web Services Inc. continues to close. Databricks Inc. and Snowflake Inc. are coming at this from a foundation of enterprise AI, while Meta’s and Anthropic’s positions are pointed directly at each other, albeit with different strategies. IBM Corp. and Oracle Corp. are clearly focused on the enterprise. And then we show a mix of legacy AI companies clustered in a pack.

In this picture, Microsoft, AWS, Databricks, Snowflake, IBM and Oracle are firmly in the enterprise AI camp, as is the pack, while Google, Meta, Anthropic and OpenAI are battling it out in the foundation model race.

A final point that we want to stress is that building standalone agents on what we’re calling Messiah AGI foundation models will be effective for simple groups of concepts and applications. But to make agents in the enterprise really work, we’re going to need a base and tooling to build swarms of agents. That’s vastly more sophisticated than, and different from, the tools to build single agents.

We’ll dig into this point at the end of this note.

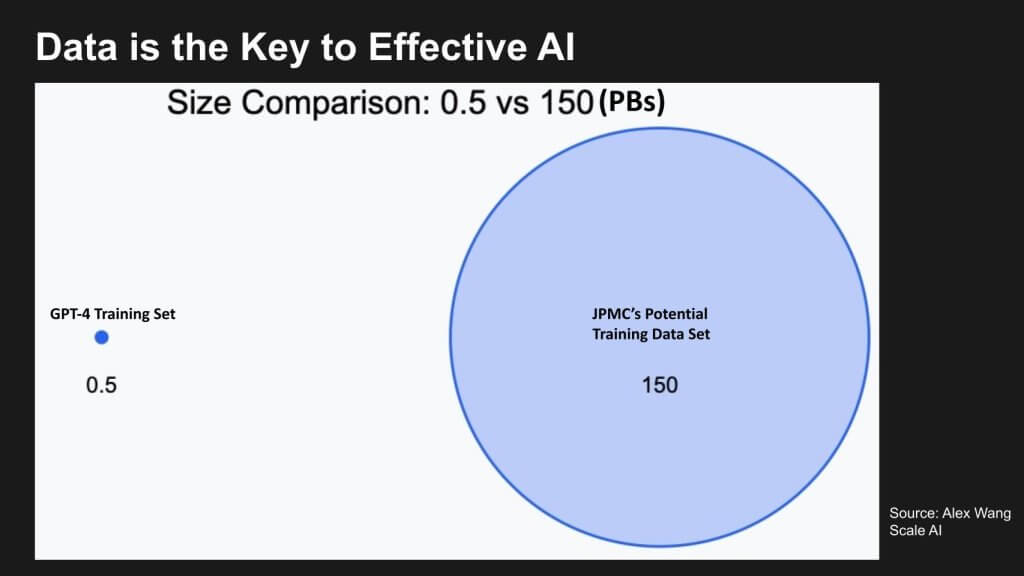

Why Jamie Dimon is Sam Altman’s biggest competitor

Let’s get to the heart of the matter here: Enterprise AGI and the unique data and process advantage firms possess. Below is a graphic from Alex Wang of Scale AI Inc. that shows that GPT-4 was trained on a half-petabyte of data. Meanwhile, JPMorgan Chase, whose CEO is Jamie Dimon, is sitting on a mountain of data that is roughly 150 petabytes in size. Now, more is not necessarily better, so this could be misleading. But the point is that JPMC’s data estate inherently contains knowhow that is not in the public domain. In other words, this proprietary knowledge is specific to JPMC’s business and is the key ingredient of its competitive advantage.

The foundation models that we’ve been talking about are not hugely differentiated. They’re all built on the same algorithmic architecture deriving from the transformer. They all progress in size, which we’ll explain. And they all use the same compute infrastructure for training. What differentiates them is the data.

Now, it’s not a straightforward conclusion to say that because JPMorgan has 150 petabytes and GPT-4 was trained on less than 1 petabyte, all the data JPMorgan has can go into training agents. But it’s a proxy for the fact that there is so much proprietary data locked away inside JPMorgan and in every enterprise that the foundation model companies will never get their hands on.

In our view, this data is hugely important because it is what you use to train the AI agents. It’s data that agents will learn from, including by observing human employees and capturing their thought processes. And much of that data is not even in that data set today. It is currently tacit knowledge.

The AI companies call this “reasoning traces” because it captures a thought process. It helps you train a model more effectively than input-output pairs. The LLM companies will never be able to train the public foundation models on these reasoning traces that are core to the knowhow and operation of those firms.
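To make the distinction concrete, here is a minimal sketch in Python of how a reasoning trace differs from a plain input-output example. The class names, fields and the expense-report scenario are hypothetical illustrations, not a format used by any vendor mentioned in this note.

```python
from dataclasses import dataclass, field


@dataclass
class InputOutputPair:
    """A plain supervised example: only the request and the final answer."""
    request: str
    outcome: str


@dataclass
class ReasoningTrace:
    """Hypothetical record of how a human expert got from request to outcome."""
    request: str
    steps: list[str] = field(default_factory=list)  # intermediate reasoning, in order
    outcome: str = ""

    def to_pair(self) -> InputOutputPair:
        # Dropping the steps is lossy: the "why" behind the outcome disappears.
        return InputOutputPair(self.request, self.outcome)


trace = ReasoningTrace(
    request="Approve expense report above the usual limit?",
    steps=[
        "Check whether the trip was pre-approved by a manager",
        "Confirm the overage is explained by a documented rebooking fee",
    ],
    outcome="approved",
)
pair = trace.to_pair()
```

The pair keeps only what was decided, while the trace also keeps how it was decided, which is the part that lives inside the firm.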

That’s why the swarm of workflow agents, which are really specialized action models, collectively have the ability to learn and embody all that management knowhow and outperform Sam’s foundation model. Again, we say collectively. So with the 100,000 agents as a swarm, or the millions of agents Jensen referred to, it’s the collective intelligence of those agents that outperforms the singular intelligence in a frontier foundation model.

The internecine economics of foundation model building

The foundation model vendors are battling it out, chasing what we’re calling a false grail; and as we said in our opening, the stakes are insanely high.

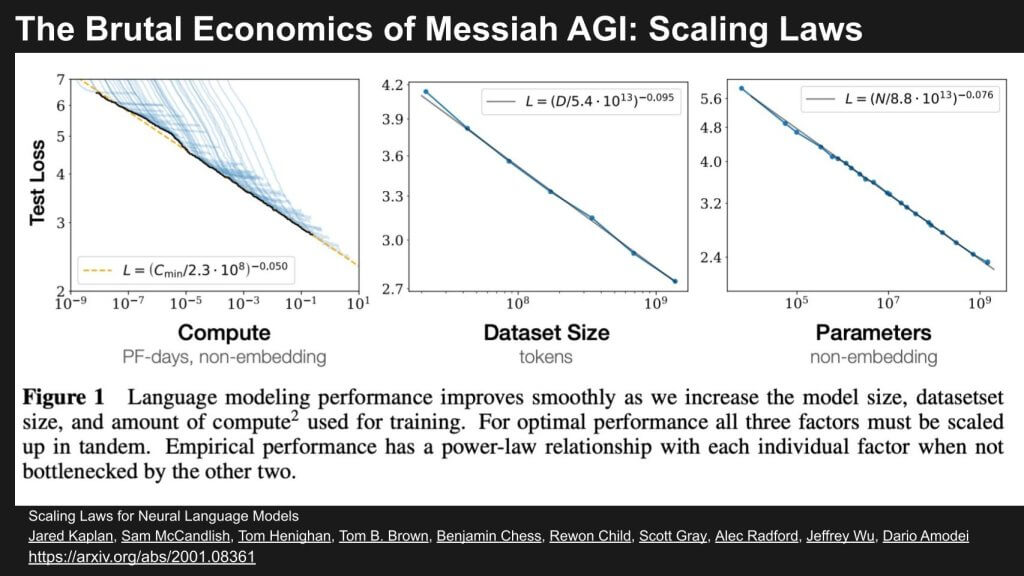

The graphic above illustrates the relationship between test loss and three key factors: compute, dataset size and parameters. All three are critical to scaling up language models effectively. Each panel shows how test loss changes with an increase in one of these factors, while the other factors are held constant.

- Compute (left panel): The graph plots test loss against compute measured in PF-days (petaflop-days), showing a declining trend in test loss as compute increases. The relationship follows a power law, indicating that as compute grows, test loss decreases, but at a progressively slower rate. The dashed line represents an empirical formula for loss, with a scaling exponent of roughly -0.050. This means returns diminish when reducing test loss solely by increasing compute without scaling the other factors proportionately.

- Dataset size (middle panel): This graph shows the impact of dataset size on test loss, with the x-axis representing tokens. As the number of tokens grows, the test loss again declines according to a power law with a scaling exponent of about -0.095. This suggests that expanding dataset size contributes to improved model performance, but, much like compute, there are diminishing returns when dataset size alone is scaled up without corresponding increases in compute and the number of parameters.

- Parameters (right panel): The third panel shows test loss relative to the number of parameters in the model. Increasing the number of parameters results in a reduction in test loss, following a power-law relationship with a scaling exponent of about -0.076. This means adding parameters enhances performance, yet the benefit plateaus if dataset size and compute aren’t scaled in tandem.
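To get a feel for how slowly loss falls under these exponents, the sketch below computes the factor by which test loss changes when a single factor is scaled up tenfold. It assumes a pure power law of the form L(x) = c · x^(-α), with α taken from the exponents quoted above; the function name and the choice of a 10x step are ours, for illustration only.

```python
def loss_ratio(scale_factor: float, exponent: float) -> float:
    """Factor by which test loss is multiplied when one resource grows by scale_factor.

    Assumes a pure power law L(x) = c * x**(-exponent); the constant c cancels in the ratio.
    """
    return scale_factor ** (-exponent)


# Exponent magnitudes as quoted for the three panels above.
EXPONENTS = {"compute": 0.050, "dataset_size": 0.095, "parameters": 0.076}

for name, alpha in EXPONENTS.items():
    print(f"10x {name}: loss multiplied by {loss_ratio(10, alpha):.3f}")
```

Under these assumptions, a tenfold increase in compute, dataset size or parameters alone trims test loss by only roughly 11%, 20% and 16% respectively, which is why each increment of progress is so expensive.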

Bottom line: The data tells us that language model performance improves smoothly as we scale up compute, dataset size and parameters, but only if these factors are scaled in harmony. But staying on the frontier is brutally competitive and expensive. Continuing with our metaphor, Jamie Dimon doesn’t have to participate in the competitive race. Rather, he benefits from it and applies innovations in AI to his proprietary data. [Note: JPMC has invested in and provided lending support to OpenAI.]

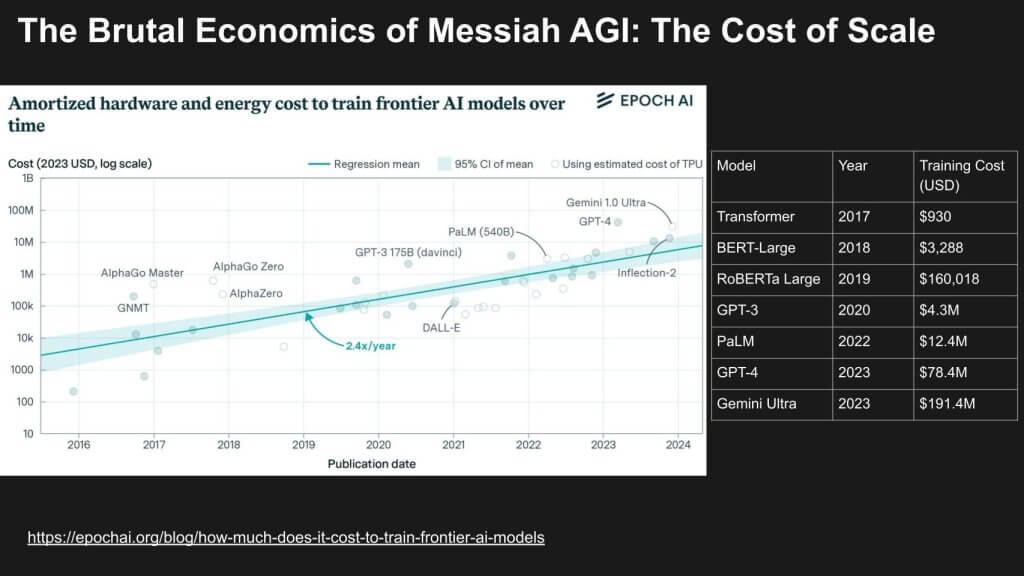

More salt in the cost wound

The previous data shows the brutal economics of scale. Each generation of model has to increase in compute, data set size and parameter count. And these have to increase together to stay competitive. But when you increase them all together, if you look at the right-hand table above, Google’s Gemini Ultra, for example, already cost close to $200 million to train.

And the chart that a company named Epoch AI put together shows that the cost of training has been going up by 2.4 times per year. And there are factors that may actually accelerate that.
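As a rough illustration of what 2.4x annual growth implies, the sketch below compounds a starting cost forward a few years. The $200 million starting point is the approximate Gemini Ultra figure cited above; treating the growth rate as constant is our simplifying assumption, not a forecast.

```python
import math

GROWTH_PER_YEAR = 2.4  # growth rate cited from Epoch AI in the text


def projected_cost(base_cost_usd: float, years: float) -> float:
    """Extrapolate training cost assuming the 2.4x annual growth simply continues."""
    return base_cost_usd * GROWTH_PER_YEAR ** years


def doubling_time_months() -> float:
    """Months for cost to double under constant 2.4x-per-year growth."""
    return 12 * math.log(2) / math.log(GROWTH_PER_YEAR)


base = 200e6  # roughly the Gemini Ultra figure quoted above
for y in range(4):
    print(f"year +{y}: ${projected_cost(base, y) / 1e6:,.0f}M")
print(f"doubling time: {doubling_time_months():.1f} months")
```

If the trend held, a $200 million training run would become a roughly $2.8 billion run three years later, with costs doubling about every nine and a half months.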

For instance, on long context windows, we believe that’s the feature of Gemini Ultra that made it perhaps so much more expensive (multiple times more expensive) than GPT-4. And then there are other techniques such as overtraining the model so that it’s more efficient at inference time or run time. The point is, there’s this enormous amount of capital going into improving the base capability, but it’s not clear how differentiated one frontier model is from the next.

Costs rise but prices are dropping quickly

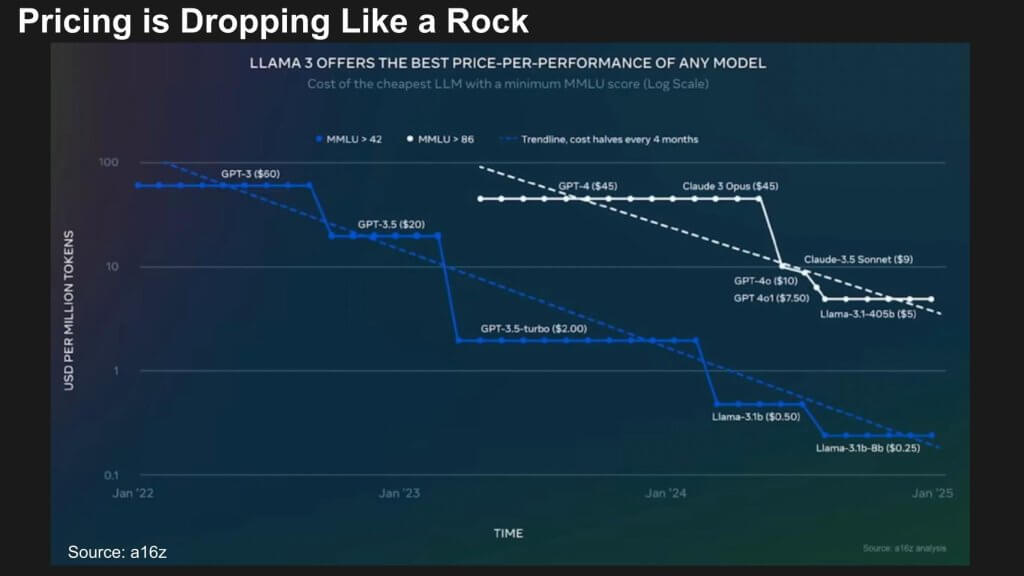

The other concerning factor here is that not only are models getting increasingly expensive to develop and improve, but pricing is dropping like a rock!

The chart above shows prices dropping by four orders of magnitude in the span of three years for GPT-3 class models. GPT-4 class models are on pace to drop two orders of magnitude within 24 months after introduction.
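To put those declines on a per-year basis, the sketch below converts “orders of magnitude over N months” into an average annual price factor. It assumes a steady exponential decline over the period, which is our simplification; the function name is illustrative.

```python
def annual_price_factor(orders_of_magnitude: float, months: float) -> float:
    """Average yearly factor by which price falls, assuming a steady exponential decline."""
    return 10 ** (orders_of_magnitude * 12 / months)


gpt3_class = annual_price_factor(4, 36)  # four orders of magnitude in three years
gpt4_class = annual_price_factor(2, 24)  # two orders of magnitude in 24 months
print(f"GPT-3 class: ~{gpt3_class:.1f}x cheaper per year")
print(f"GPT-4 class: ~{gpt4_class:.1f}x cheaper per year")
```

On these assumptions, prices fall by a factor of roughly 10 to 20 each year while training costs rise about 2.4 times per year, which is the squeeze this section describes.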

The point is, competition drives down prices and organizations can leverage advanced language models more economically, facilitating wider AI integration across sectors. And, as many have predicted, this could lead to a commoditization of foundation models.

Enter Zuck and Elon with open-source models

On the previous slide, we showed OpenAI and Anthropic and didn’t even include Gemini. In the latter years, we have Meta with its Llama 3, which as shown in the ETR data is really being adopted by companies, including enterprises and independent software vendors, in their products.

Musk stood up an Nvidia H100 training cluster faster and bigger (apparently) than anyone. It’s not completely done. But the point is there are more frontier-class competitors pouring into the market. And we already see prices dropping precipitously, so the economics of that business are not that attractive.

Other open-source players such as IBM with Granite 3.0, while not attempting to compete with the largest foundation models, provide support for the thesis around Enterprise AGI, with models that perhaps are smaller but are also sovereign, secure and more targeted at enterprise use cases.

The frontier risks turning into a commodity business. The models, while likely to have some differentiation, require so many resources to build that it seems likely to severely depress the prospect of compelling economic returns.

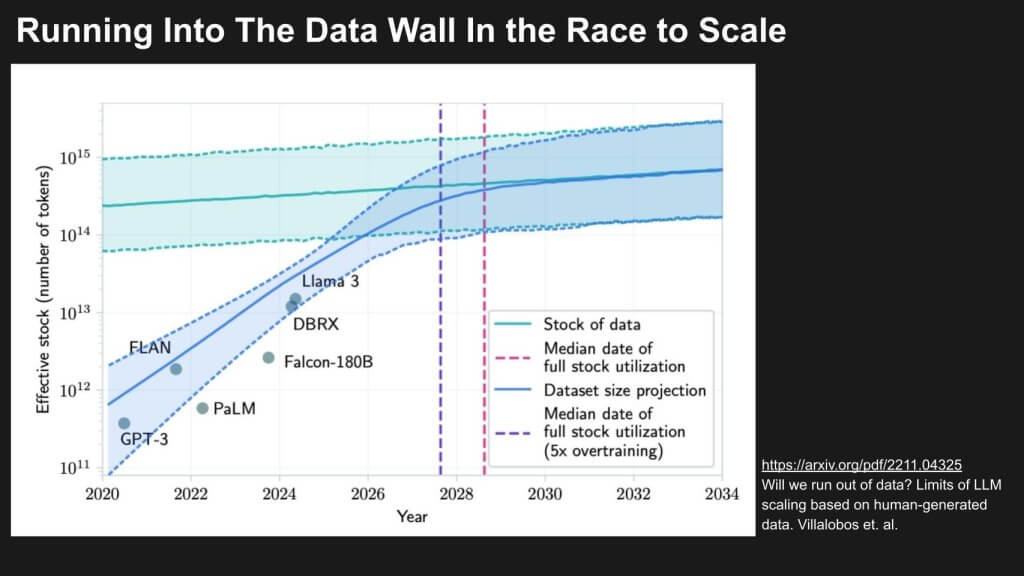

More bad news: LLMs hitting a data wall

Below we show that without new approaches to data generation or efficiency, scaling up LLMs may soon face diminishing returns, constrained not by compute or parameters but by the availability of high-quality data. Because of the scaling laws, we need to keep scaling up the training data sets. But we’re running out of high-quality tokens on the public web.

What about synthetic data generation?

A key solution to the problem is synthetically generated data, but it can’t be just any data that a model generates. The data actually has to represent new knowledge, not a regurgitation of what’s already in the models.

And one way it can do that is with data that can be verified in the real world, such as computer code or math. It’s the verification process, such as running the code, that makes it high-quality data, because you can then say it is new. Generating it was a hypothesis, while testing and validating it make it new, high-quality knowledge. But the problem is that even though you can do this with code and math, it doesn’t actually make the models knowledgeable in all other domains.
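The generate-then-verify idea can be sketched in a few lines of Python. Everything here is a hypothetical toy: the three candidate functions stand in for model-generated code, and a small set of test cases stands in for real-world verification. Only candidates that pass every test are kept as training data.

```python
from typing import Callable


# Hypothetical stand-ins for model-generated solutions to "return the absolute value of x".
def cand_correct(x: int) -> int:
    return x if x >= 0 else -x


def cand_wrong(x: int) -> int:
    return x  # wrong for negative inputs


def cand_crash(x: int) -> int:
    return abs(x) // (x // x)  # raises ZeroDivisionError when x == 0


candidates: list[Callable[[int], int]] = [cand_correct, cand_wrong, cand_crash]
test_cases = [(3, 3), (-4, 4), (0, 0)]


def passes_all(fn: Callable[[int], int]) -> bool:
    """Run the candidate against every test; any wrong answer or crash rejects it."""
    try:
        return all(fn(arg) == expected for arg, expected in test_cases)
    except Exception:
        return False


verified = [fn for fn in candidates if passes_all(fn)]
print(f"kept {len(verified)} of {len(candidates)} candidates")
```

The filter works here because correctness can be checked mechanically. For most enterprise processes there is no equivalent test suite, which is the limitation the paragraph above points to.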

That’s why the models need to learn from human expertise.

What’s the future of synthetic data?

This question is addressed in comments made by Alex Wang in a No Priors conversation. Take a listen and then we’ll come back and comment.

Here’s what Alex Wang had to say on this topic of synthetic data production:

Data production… will be the lifeblood of all the future of these AI systems. Crazy stuff. But JPMorgan’s proprietary data set is 150 petabytes of data. GPT-4 is trained on less than 1 petabyte of data. So there’s clearly so much data that exists within enterprises and governments that is proprietary data that can be used for training incredibly powerful AI systems. I think there’s this key question of what’s the future of synthetic data and how synthetic data needs to emerge? And our perspective is that the critical thing is what we call hybrid human AI data. So how can you build hybrid human AI systems such that AI is doing a lot of the heavy lifting, but human experts and people, the basically best and brightest, the smartest people, the best at reasoning, can contribute all of their insight and capability to make sure that you produce data that’s of extremely high quality, of high fidelity, to ultimately fuel the future of these models.

Will synthetic data allow FM vendors to break through the data wall?

When Alex mentioned that the best and brightest need to contribute their reasoning and insight, highlighting his platform’s ability to gather data from possibly hundreds of thousands of contributors, it struck a chord of idealism. His vision, as he described it, sounded reminiscent of Karl Marx’s famous line, “From each according to his ability, to each according to his needs.” This comparison might sound exaggerated, but it underscores a utopian vision, akin to the idealism of communism itself.

However, the reality is that such valuable knowledge and expertise, often the lifeblood of for-profit enterprises, isn’t likely to be freely extracted simply by offering modest compensation. The insights and knowhow embedded within these companies are too critical to their competitive edge to be willingly shared for a nominal fee. Jamie Dimon would be foolish to allow that to happen, despite his investment in OpenAI.

This is the real data wall.

The enterprise automation opportunity is enormous but requires specialized knowledge

A premise we’re working from today is that enterprise AGI is a huge opportunity for companies and most of the value created will accrue to those firms.

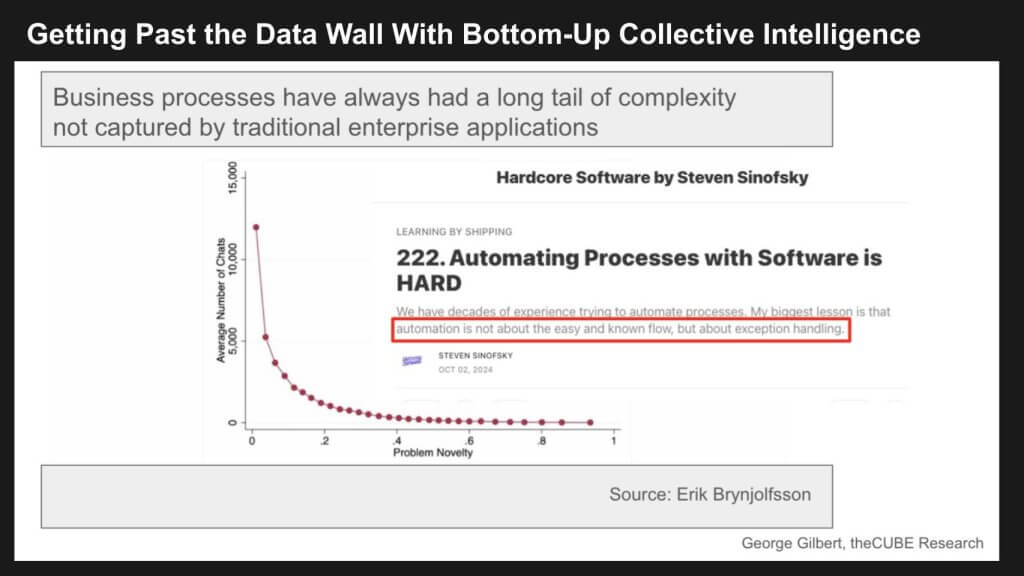

Let’s examine this with a look at the Power Law concept that we’ve put forth previously from theCUBE Research and use the graphic below from Erik Brynjolfsson.

Automation of complex business processes has historically been so difficult because each process is unique and contains specific tribal knowledge. Enterprise apps have been limited in terms of what they can address (such as human resources, finance, customer relationship management and the like). Custom apps have been able to move down the curve slightly, but most of the long tail remains unautomated.

As highlighted above in red, Brynjolfsson implies that horizontal enterprise apps are relatively straightforward to create and apply to automate known workflows, but unknown processes require an understanding of exception handling.

Agentic AI has the potential to automate complicated workflows

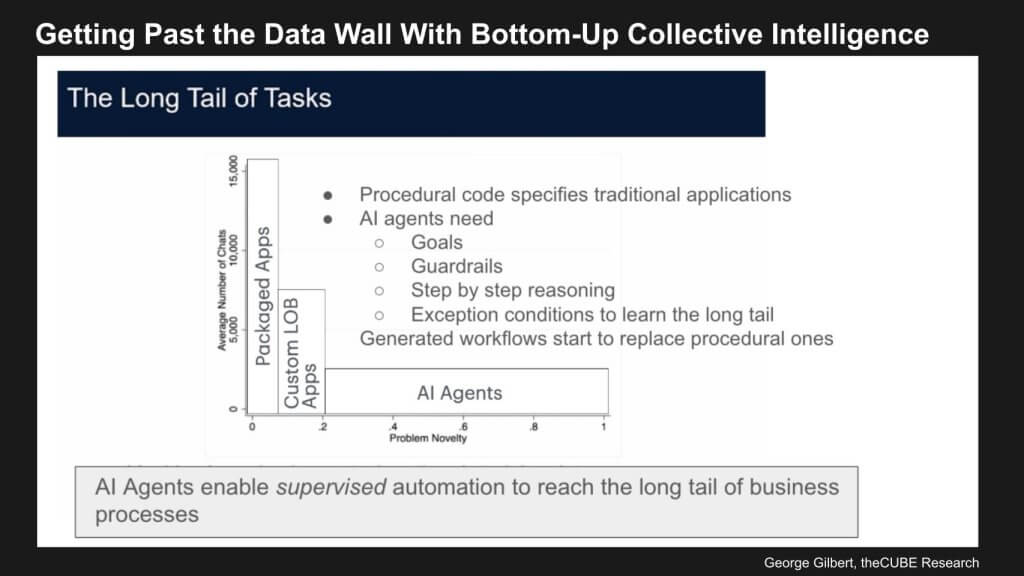

Below we’ve taken Brynjolfsson’s chart and superimposed agentic AI onto it. What we show is that for decades, we’ve solved high-volume processes with packaged apps such as finance. Everyone does finance the same way. HR is another example. Mid-volume processes were tackled with custom applications.

But the economics of building custom applications were difficult because you had to hand-code the rules associated with each business process. And each business process itself contained essentially a snowflake of variants that were very difficult to capture.

The reason we couldn’t get to the long tail of processes, and the snowflakes within each process, is that it wasn’t feasible to program the complex workflow rules. So we see a future where agents can change the economics of achieving that by observing and learning from human actions.

First, citizen developers give agents goals and guardrails. But just as important, agents can generate plans with step-by-step reasoning that humans can then edit and iterate on. Then the exception conditions become learnable moments that help get the agent further down the long tail the next time.

Agents learning from humans

We’re going to illustrate this by walking you through a demo of how agents can learn from human reasoning traces. Specifically, the demo shows that: 1) the technology to learn from human actions is actually in market today; 2) agents can learn from human reasoning traces on exceptions; and 3) there are still big gaps to be filled that are fundamental to enterprise AGI becoming a reality.

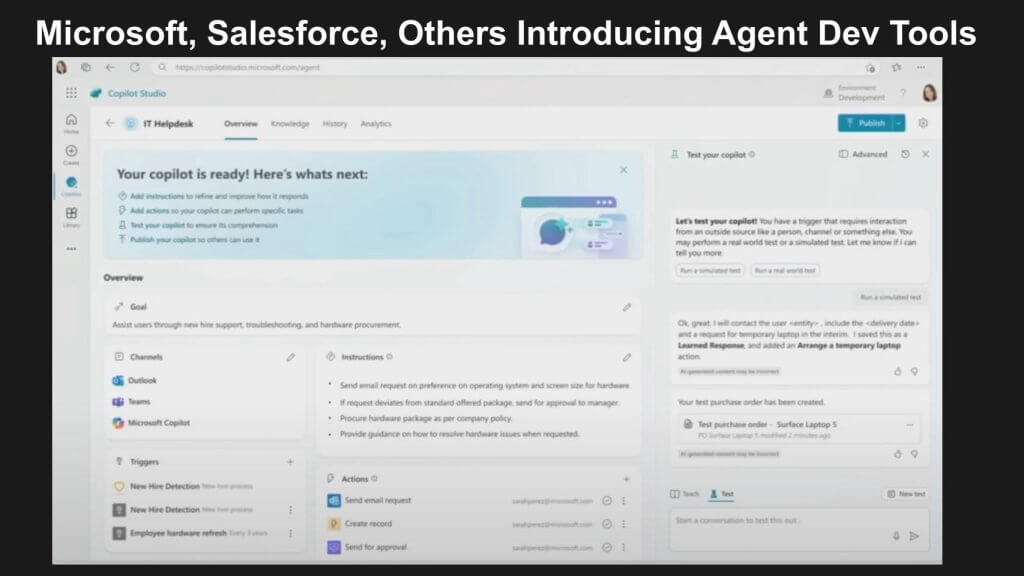

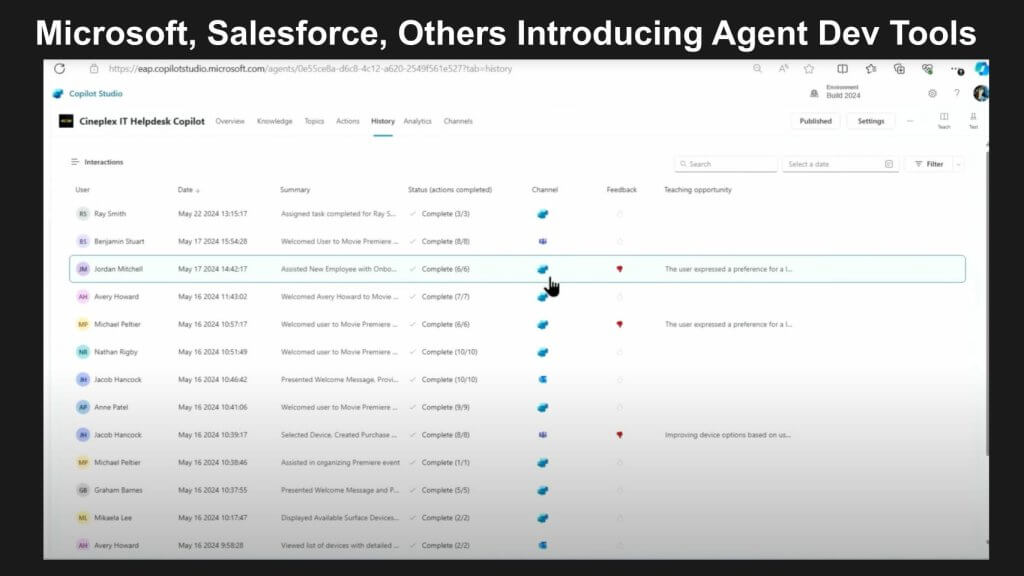

The first slide of the demo below shows a screenshot from Microsoft’s Copilot Studio.

This capability is shipping today from companies such as Microsoft, Salesforce Inc. and others. The key point is that agents can learn from their human supervisors. We’re looking at a screen above that’s defining an agent that onboards an employee. It doesn’t take AGI; it doesn’t even need to be fully gen AI business logic. It starts by leveraging existing workflows and figuring out how to orchestrate the long tail of activities by combining and recombining those existing building blocks.

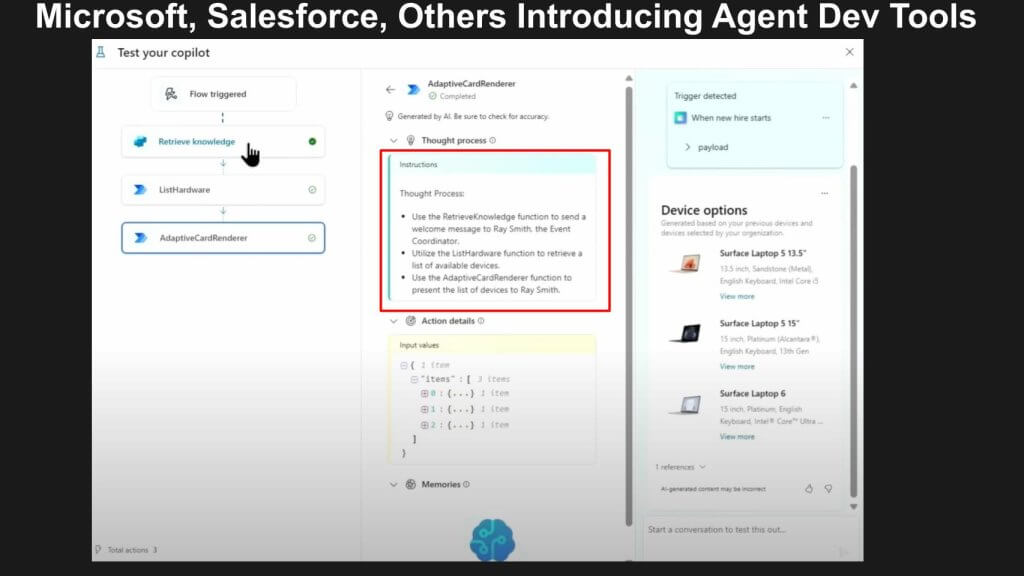

An agent’s thought process in action

An agent’s real power is that it learns from exceptions while in production. This is the opposite of traditional software, where you have to catch and suppress bugs before you go into production. Here, essentially you can think of a bug as a learnable moment.

Below, this particular agent is onboarding a new employee and providing laptop options. The agent is showing you its thought process in the center pane (highlighted in red).

This is not actually what you’d see at runtime, but in this example the agent is offering the laptop. The employee works in events and this particular employee needs a tablet, not a laptop. So the agent logs or captures a teachable moment that, once learned, extends what the agent can do without going back to a human supervisor. And each teachable moment then extends the agent’s ability to move down that power law curve and handle more edge cases.

An agent learns from an exception

Below we show an exception where the user wants something different (a tablet) than what’s offered on the standard menu. The machine can’t figure it out and the human has to be brought into the loop. The agent then learns from the human reasoning and the actions that the human took to satisfy the next step in the process. That action becomes knowledge embedded into this organic system.

It’s worth pointing out that every row you see above is an instance of the agent having run its onboarding process. The little red mark on the row where the hand cursor points indicates an exception condition. And that was where the human who was being onboarded said, “the choices you’re giving me aren’t good enough.”

How the agentic system learns and evolves

Below we show the feedback loop: how the agent learns and the system becomes more intelligent. On the right side is the thought process. There’s a little comment that says negative feedback, the system responds accordingly, and then the agent knows that the user had that negative feedback. So it creates a potentially learnable step and it expands the range of options to include tablets without going through all the screens. And now, when an event planner is onboarded, the system adds tablets to the menu.
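The loop described in this demo can be sketched as a toy Python class. This is not how Copilot Studio or any shipping product implements it; the class, method names and menu items are hypothetical and exist only to show the escalate, learn, then handle-it-next-time pattern.

```python
from dataclasses import dataclass, field


@dataclass
class OnboardingAgent:
    """Toy agent that offers devices by role and learns from human-resolved exceptions."""
    default_menu: list[str] = field(default_factory=lambda: ["laptop A", "laptop B"])
    learned: dict[str, list[str]] = field(default_factory=dict)  # role -> extra options
    exceptions: list[tuple[str, str]] = field(default_factory=list)  # (role, request) log

    def menu_for(self, role: str) -> list[str]:
        return self.default_menu + self.learned.get(role, [])

    def handle_request(self, role: str, requested: str) -> str:
        if requested in self.menu_for(role):
            return "fulfilled"
        # Nothing on the menu fits: log the exception and hand off to a human.
        self.exceptions.append((role, requested))
        return "escalated"

    def learn_from_human(self, role: str, approved_option: str) -> None:
        """Record the supervisor's resolution so the same case needs no escalation."""
        self.learned.setdefault(role, []).append(approved_option)


agent = OnboardingAgent()
first = agent.handle_request("event planner", "tablet")    # not on the menu yet
agent.learn_from_human("event planner", "tablet")          # human resolves the exception
second = agent.handle_request("event planner", "tablet")   # now handled without a human
print(first, second)
```

The first request is escalated and the second is fulfilled. The learned option is scoped to the role that raised the exception, so other roles still see only the standard menu.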

Advancing seven decades of automation

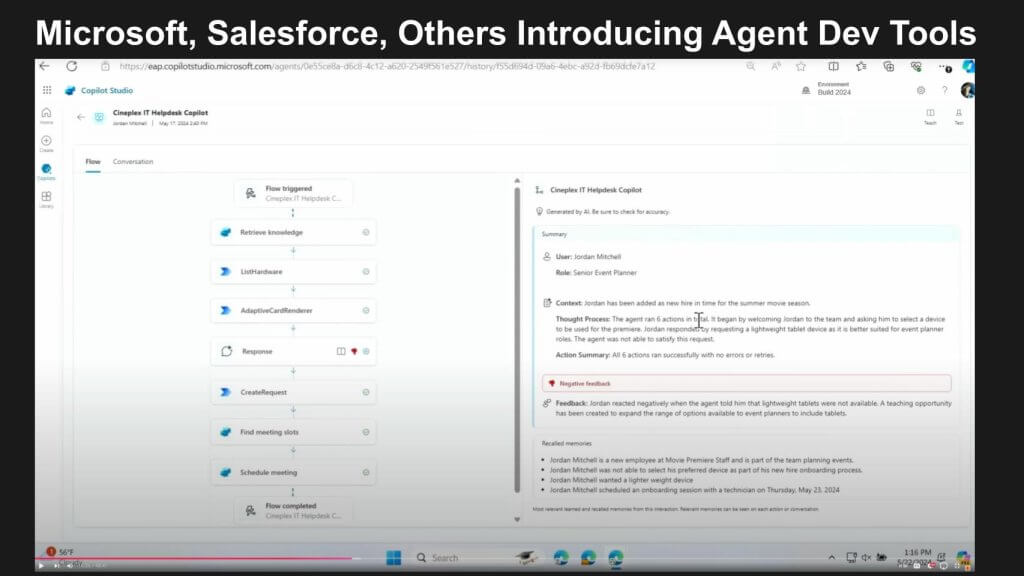

Let’s take a step back and put this into the context of the history of the information technology industry and automation specifically. Computing initially automated back-office functions, and then it evolved into personal computer productivity. In the tech industry we like to talk about a series of waves where each subsequent wave abstracts the previous generation’s complexity away.

It’s worth pointing out that the above chart is from Carlota Perez’s famous book “Technological Revolutions and Financial Capital.” When you go through a wave, it’s toward the mature end of the wave that the people building on the technology reap the benefits. And that’s the ultimate message we’re conveying here: The foundation models are enormously capital-intensive and talent-intensive, but all the data and knowhow that turns data into business value lies locked up inside the enterprises that are not going to be feeding the frontier model companies. In other words, it’s the enterprises that are going to get the big benefits.

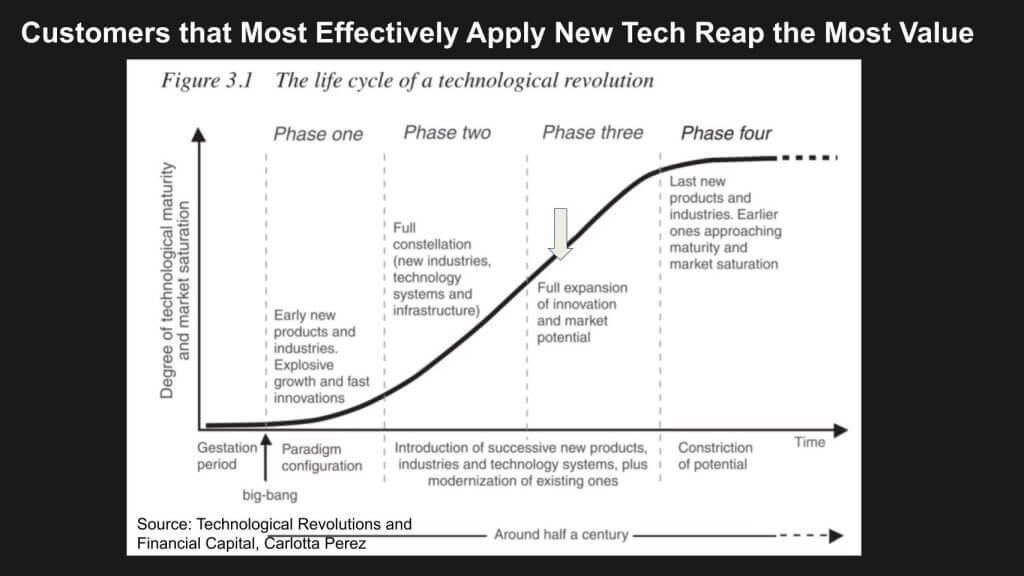

Emerging technology layers are a missing ingredient

Below we show the evolving technology stack and the impact AI could have on the vision of enterprise AGI. The continuing evolution of technology infrastructure represents a shift toward a dynamic, interconnected framework that allows a real-time digital representation of a corporation.

As enterprises increasingly depend on an ecosystem of cloud-based services (infrastructure-as-a-service or IaaS, platform-as-a-service or PaaS, and software-as-a-service or SaaS), technology becomes the core enabler of modern business models. This integration supports an environment where data resides everywhere and is accessible, governed and trusted. Emerging capabilities, such as digital twins, present the vision of a unified, organic system that mirrors the people, processes and resources inside an enterprise, forming a shared and actionable source of truth.

For the collective intelligence of 100,000 agents to work, they need a shared source of truth. The green blocks below represent the data harmonization layer that we talk about so often and the agent control framework we’ve discussed in previous Breaking Analysis episodes. The graphic also shows a new analytics layer that can interpret top-down goals and deliver outcomes based on desired organizational metrics.

However, achieving this nirvana state requires a transformative approach to data and process management. Historically, microservices architectures fostered rapid evolution but led to isolated sources of truth, creating challenges in synthesizing enterprise data into coherent insights. Today, as organizations seek to align human and AI agents in a collaborative framework, there is a pressing need for a central source of truth, one that transcends traditional databases and orchestrates processes across the enterprise. This unified framework must not only align with strategic objectives such as profitability, growth and sustainability, but also be capable of managing and analyzing the complexities of business processes in real time.

The next generation of enterprise software, therefore, must deliver more than transactional efficiency; it must support a process-centric approach, bridging the disparate data streams from operational, analytic and historical systems. This agent-based framework, underpinned by advanced analytics, will redefine traditional business intelligence.

Many emerging players are exploring this space with distinct approaches, ranging from relational AI to process mining, data and application vendors such as Celonis SE, UiPath Inc., Salesforce, Microsoft and Palantir Technologies Inc. Their varied strategies highlight the complexity and opportunity within this new paradigm.

In our view, as the industry further develops these capabilities, understanding the nuances of each approach will be critical to building applications that can capitalize on this integrated, process-driven source of truth. The future presents both challenges and significant opportunities, setting the stage for a new era in enterprise applications, data orchestration and process alignment.

Tell us what you think. Do you believe the huge investments going to foundation models are misguided in a quest for fool’s gold? Or do you believe one model will rule them all, including enterprise AGI? What do you think of this concept of Enterprise AGI? Will FM vendors such as OpenAI ultimately develop a business model that dominates and captures most of the value? Or do you believe enterprises will leverage the hundreds of billions of dollars pouring into AI and gen AI for their own proprietary benefit?

Let us know.

Image: theCUBE Research/DALL-E

Disclaimer: All statements made regarding companies or securities are strictly beliefs, points of view and opinions held by SiliconANGLE Media, Enterprise Technology Research, other guests on theCUBE and guest writers. Such statements are not recommendations by these individuals to buy, sell or hold any security. The content presented does not constitute investment advice and should not be used as the basis for any investment decision. You and only you are responsible for your investment decisions.

Disclosure: Many of the companies cited in Breaking Analysis are sponsors of theCUBE and/or clients of Wikibon. None of these firms or other companies have any editorial control over or advanced viewing of what’s published in Breaking Analysis.
