Google LLC today announced it’s bringing its custom Ironwood chips online for cloud customers, unleashing tensor processing units that can scale up to 9,216 chips in a single pod to become the company’s most powerful AI accelerator architecture to date.

The new chips will be available to customers in the coming weeks, alongside new Arm-based Axion instances that promise up to twice the price-performance of current x86-based alternatives.

Google’s own frontier models, including Gemini, Veo and Imagen, are trained and deployed using TPUs, alongside equally sizable third-party models such as Anthropic PBC’s Claude. The company said the advent of AI agents, which require deep reasoning and advanced task management, is defining a new era in which inference, the runtime intelligence of active models, has greatly increased the demand for AI compute.

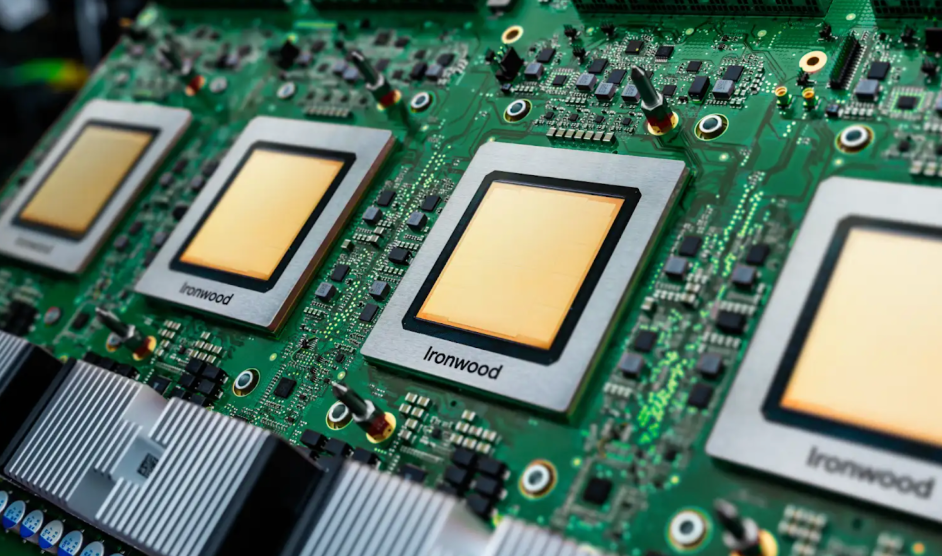

Ironwood: Google’s AI powerhouse chip

The tech giant debuted Ironwood at Google Cloud Next 2025 in April and touted it as the most powerful TPU accelerator the company has ever built.

The next-generation architecture allows the company to scale up to 9,216 chips in a single server pod, linked together with inter-chip interconnect to provide up to 9.6 terabits per second of bandwidth. They can be connected to a colossal 1.77 petabytes of shared high-bandwidth memory, or HBM.

Inter-chip interconnect, or ICI, acts as a “data highway” for chips, allowing them to think and act as a single AI accelerator brain. This is essential because modern AI models require significant processing power, but they can’t fit on single chips and must be split up across hundreds or thousands of processors for parallel processing. Much like thousands of buildings crammed together in a city, the biggest problem this kind of system faces is traffic congestion. With more bandwidth, the chips can talk faster and with less delay.
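To put the quoted interconnect figure in more familiar units, here is a minimal back-of-the-envelope sketch in Python. It converts 9.6 terabits per second to bytes per second and estimates an idealized transfer time. The 10-gigabyte payload is a hypothetical example, and the calculation assumes decimal units, zero latency and no protocol overhead. The article does not specify whether the figure applies per chip or per link, so treat the result as a lower bound for illustration only.

```python
# Back-of-the-envelope sketch: ideal transfer time at the quoted ICI bandwidth.
# Assumptions (not from Google): decimal units, zero latency, no protocol
# overhead, and a hypothetical 10 GB payload.

LINK_BITS_PER_SECOND = 9.6e12  # 9.6 terabits per second, as quoted in the article
BITS_PER_BYTE = 8

# Convert the link speed from bits per second to bytes per second.
link_bytes_per_second = LINK_BITS_PER_SECOND / BITS_PER_BYTE


def ideal_transfer_seconds(payload_bytes: float) -> float:
    """Lower bound on transfer time: payload size divided by bandwidth."""
    return payload_bytes / link_bytes_per_second


HYPOTHETICAL_PAYLOAD_BYTES = 10e9  # 10 GB, chosen only for illustration

print(f"Link bandwidth: {link_bytes_per_second / 1e12:.1f} TB/s")
print(
    "Ideal time for 10 GB: "
    f"{ideal_transfer_seconds(HYPOTHETICAL_PAYLOAD_BYTES) * 1e3:.2f} ms"
)
```

Real transfers would take longer than this estimate, since congestion, latency and software overhead all add to the time.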

HBM holds the vast amount of real-time data AI models need to “remember” when training or processing queries from users. According to Google, the 1.77 petabytes of accessible data in a single, unified system is industry-leading. A single petabyte, or 1,000 terabytes, can represent around 40,000 high-definition Blu-ray movies or the text of hundreds of thousands of books. Making all of this directly accessible lets AI models respond immediately and intelligently with enormous amounts of information.
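The memory figures can be sanity-checked with a little arithmetic. The Python sketch below assumes decimal units (1 petabyte = 10^15 bytes), a 25-gigabyte single-layer Blu-ray disc, and HBM divided evenly across all 9,216 chips in a pod. None of these assumptions are stated in the article, so the per-chip number is an estimate, not a published specification.

```python
# Rough arithmetic on the pod memory figures quoted in the article.
# Assumptions (not from the article): decimal SI units, a 25 GB single-layer
# Blu-ray disc, and HBM spread evenly across every chip in the pod.

GB = 10**9
TB = 10**12
PB = 10**15

POD_HBM_BYTES = 1_770 * TB   # 1.77 petabytes of shared HBM per pod
CHIPS_PER_POD = 9_216        # maximum chips in a single pod
BLU_RAY_BYTES = 25 * GB      # assumed capacity of one Blu-ray disc

# Estimated HBM share per chip, in gigabytes.
hbm_per_chip_gb = POD_HBM_BYTES / CHIPS_PER_POD / GB

# How many assumed 25 GB discs fit in one petabyte, and in the whole pod.
blu_rays_per_petabyte = PB // BLU_RAY_BYTES
blu_rays_per_pod = POD_HBM_BYTES // BLU_RAY_BYTES

print(f"Estimated HBM per chip: {hbm_per_chip_gb:.0f} GB")
print(f"Blu-ray discs per petabyte: {blu_rays_per_petabyte:,}")
print(f"Blu-ray discs per pod: {blu_rays_per_pod:,}")
```

Under these assumptions, the 40,000-movie comparison for one petabyte holds, and the pod’s memory works out to roughly 192 gigabytes per chip.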

The company said the new Ironwood-based pod architecture can deliver more than 118x more FP8 ExaFLOPS than the nearest competitor and 4x better performance for training and inference than Trillium, the previous generation of TPU.

Google included a new software layer on top of this advanced hardware, co-designed to maximize Ironwood’s capabilities and memory. This includes a new Cluster Director capability in Google Kubernetes Engine, which enables advanced maintenance and topology awareness for better process scheduling.

For pretraining and post-training, the company announced enhancements to MaxText, a high-performance, open-source large language model training framework, for implementing reinforcement learning techniques. Google also recently announced upgrades to vLLM to support inference switching between GPUs and TPUs, or a hybrid approach.

Anthropic, an early user of Ironwood, said that the chips provided impressive price-performance gains, allowing it to serve massive Claude models at scale. The leading AI model developer and provider announced late last month that it plans to access up to 1 million TPUs.

“Our customers, from Fortune 500 firms to startups, rely upon Claude for their most important work,” Anthropic’s Head of Compute James Bradbury said. “As demand continues to grow exponentially, we’re increasing our compute resources as we push the boundaries of AI research and product development.”

Axion expands with N4A and C4A metal instances

Google also announced the expansion of its Axion offerings with two new services in preview: N4A, its second-generation Axion virtual machines, and C4A metal, the company’s first Arm Ltd.-based bare-metal instances.

Axion is the company’s custom Arm-based central processing unit, designed to provide energy-efficient performance for general-purpose workloads. Google executives noted that the key to Axion’s design philosophy is its compatibility with the company’s workload-optimized infrastructure strategy. It uses Arm’s expertise in efficient CPU design to deliver significant performance and power-use improvements over traditional x86 processors.

“The Axion processors will have 30% higher performance than the fastest Arm processors available in the cloud today,” Mark Lohmeyer, vice president and general manager of AI and computing infrastructure at Google Cloud, said in an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio, during Google Cloud Next 2024. “They’ll have 50% higher performance than comparable x86 generation processors and 60% higher energy efficiency than comparable x86-based instances.”

Axion provides greatly increased efficiency for modern general-purpose AI workflows, and it can be coupled with the new specialized Ironwood accelerators to handle complex model serving. The new Axion instances are designed to provide the operational backbone, such as high-volume data preparation, ingestion, analytics and running the virtual services that host intelligent applications.

N4A instances support up to 64 virtual CPUs and 512 gigabytes of DDR5 memory, with support for custom machine types. The new C4A metal delivers dedicated physical servers with up to 96 vCPUs and 768 gigabytes of memory. These two new services join the company’s previously announced C4A instances designed for consistent high performance.
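For a quick comparison of the two instance shapes, the Python sketch below computes memory per vCPU at the quoted maximums. It assumes the maximum memory pairs with the maximum vCPU count, which the article does not state, and the `InstanceShape` class is a hypothetical helper written for this illustration, not part of any Google Cloud API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceShape:
    """Hypothetical record of an instance's quoted maximum size."""

    name: str
    max_vcpus: int
    max_memory_gb: int

    @property
    def memory_per_vcpu_gb(self) -> float:
        # Assumes maximum memory is paired with the maximum vCPU count.
        return self.max_memory_gb / self.max_vcpus


# Figures as quoted in the article.
SHAPES = [
    InstanceShape(name="N4A", max_vcpus=64, max_memory_gb=512),
    InstanceShape(name="C4A metal", max_vcpus=96, max_memory_gb=768),
]

for shape in SHAPES:
    print(f"{shape.name}: {shape.memory_per_vcpu_gb:.1f} GB per vCPU")
```

At these maximums, both shapes come out to the same memory-to-vCPU ratio. Custom machine types on N4A may allow other ratios.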

Photo: Google
