When Dun & Bradstreet Holdings Inc. set out to build a set of analytical capabilities anchored in artificial intelligence three years ago, it confronted a problem that has become common across the enterprise AI landscape: how to scale up AI workflows without sacrificing trust in the underlying data.

Trust wasn’t a negotiable issue. The company’s Data Universal Numbering System, which is the equivalent of a Social Security number for businesses, was already embedded in credit decisioning, compliance, lending and supplier qualification workflows at more than 200,000 customers, including about 90% of the Fortune 500.

Introducing agentic AI created new challenges around transparency, lineage and recoverability that demanded additional safeguards, said Gary Kotovets, D&B’s chief data and analytics officer. “The essence of our business is trust,” he said. “Once we began making that data available through AI and agents, we needed to be certain the same level of trust carried through.”

Over the course of two years, D&B built a multilayered data resilience framework that includes consistent backup and retention policies, model version controls, confidence scoring and integrity monitoring to detect anomalies and artificial outputs. It also expanded its governance layer to prevent data leakage and enforce strict rules around who has access to data.

D&B’s Kotovets: “The essence of our business is trust.” Photo: D&B

“We began with [a set of governance standards] that we thought covered everything, but we’ve been adding to them over the past two or three years,” Kotovets said. By the time D&B.AI launched, trust was more than a marketing message; it was a measurable property of the system.

D&B’s experience highlights the pains organizations must take to ensure that AI in general, and agentic AI in particular, can deliver consistently trustworthy results. Recent research suggests that many firms are far from reaching that goal.

As firms race to meet board-level demands to put AI to work, they often neglect the foundation of data resilience that supports reliable AI model performance. That’s creating new cybersecurity vulnerabilities and could slow long-term AI adoption if trust erodes.

Security disconnect

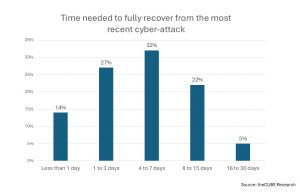

A new study by theCUBE Research found that although most organizations rate their performance as strong against the highly regarded National Institute of Standards and Technology Cybersecurity Framework, only 12% said they can recover all their data after an attack, and 34% experienced data losses exceeding 30% in the past year.

These gaps in data resilience, or the ability to protect, maintain and recover data from disruptions, are being magnified by the data-hungry nature of AI models. Organizations eager to derive insights from years of largely inaccessible data are building massive repositories of unstructured information without paying adequate attention to security, access control, backup and classification, many experts say. The black-box nature of AI models makes poorly governed data a trigger for misinformation, exposure and tampering.

“How can you do agentic AI when your basics are such a mess?” asked Christophe Bertrand, principal analyst for cyber resiliency, data protection and data management at theCUBE Research.

Although there’s broad agreement that AI demands high-quality, well-governed data, the research indicates that AI inference data is often poorly governed, inadequately classified and rarely backed up. Just 11% of respondents back up more than 75% of their AI data, and 54% back up less than 40%, according to theCUBE Research.

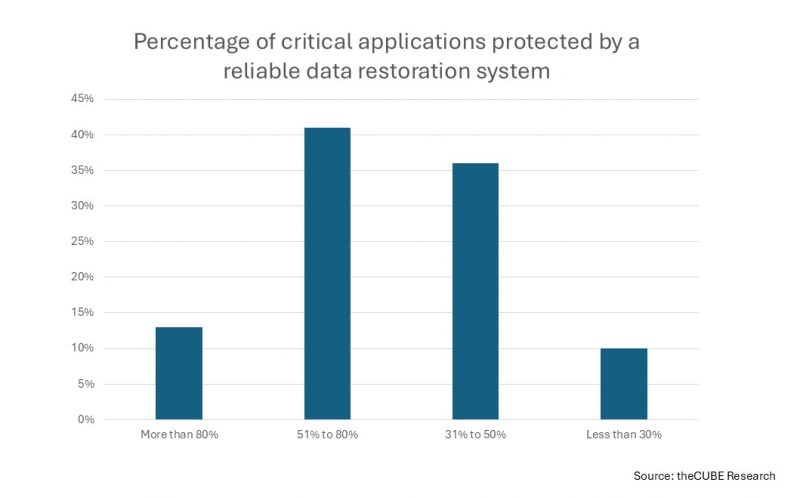

Forty-eight percent said less than half of their critical applications are protected by a comprehensive data restoration solution, while only 4% said more than 90% of their critical applications are fully protected.

Agentic amplifier

The risks of poor data resilience will be magnified as agentic AI enters the mainstream. Whereas generative AI applications respond to a prompt with an answer in the same manner as a search engine, agentic systems are woven into production workflows, with models calling one another, exchanging data, triggering actions and propagating decisions across networks. Erroneous data can be amplified or corrupted as it moves between agents, as in the party game “telephone.”

Countly’s Soner: “AI amplifies weak data pipelines” Photo: LinkedIn

Other studies have uncovered similar confidence gaps. Deloitte LLP’s recent State of AI in the Enterprise survey of more than 3,000 business and information technology leaders found that while 74% expect to use agentic AI within two years, only 21% have mature governance practices in place for autonomous agents.

A survey of 3,500 IT and business leaders last fall by trust management platform Vanta Inc. found that though 79% are using or planning to use AI agents to guard against cyberattacks, 65% said their planned usage outpaces their understanding of the technology.

A Gartner Inc. report last month asserted that even as executives and chief information security officers “all claim to value cyber resilience, organizations chronically underinvest in it due to both organizational inertia and an outdated zero-tolerance-for-failure mindset.” Gartner said organizations fare worst in the critical response and recovery stages of the NIST framework.

This all adds up to a looming trust problem. Boards and chief information officers agree that artificial intelligence can’t be implemented at scale without high-quality, resilient data. Yet in many organizations, the inference data used to feed AI engines is poorly governed, inconsistently classified and rarely backed up. That makes it all but impossible to verify how decisions are made or to replay and unwind downstream effects.

The barrier to enterprise AI adoption may ultimately be less about model accuracy or the availability of processing power than the ability to ensure the integrity, lineage and recoverability of the data that AI depends upon.

“AI doesn’t expose weak data pipelines,” said Onur Alp Soner, chief executive of analytics firm Countly Ltd. “It amplifies them.”

Compliance ≠ resilience

Experts cite a number of reasons data protection gets short shrift in many organizations. A key one is an overly intense focus on compliance at the expense of operational excellence. That’s the difference between meeting a set of formal cybersecurity metrics and being able to survive real-world disruption.

Compliance guidelines specify policies, controls and audits, while resilience is about operational survivability, such as maintaining data integrity, recovering full business operations, replaying or rolling back actions and containing the blast radius when systems fail or are attacked.

Info-Tech’s Avakian: Checkbox compliance creates a false sense of confidence. Photo: LinkedIn

Organizations tend to conflate the two, but having plans in place is different from testing them in real-world conditions. “They’ll look at NIST as a control framework and say, ‘OK, we have a policy for this,’” said Erik Avakian, technical counselor at Info-Tech Research Group Inc. and the Commonwealth of Pennsylvania’s former CISO. “They may have a policy, but they haven’t measured it.”

Checkbox compliance creates a “false sense of confidence,” he said. “Have we actually gone in and tested those things? And do they work? Some frameworks are a self-assessment without proof of implementation,” he added, allowing CISOs to effectively grade their own work.

Another factor is cybersecurity’s traditional focus on preventing intrusions rather than containing damage. That strategy has become “prohibitively expensive and impractical,” Gartner noted. Its researchers instead recommend “a new way of thinking that prioritizes cyber resilience and mitigates harm caused by inevitable breaches.”

Someone else’s problem

Organizational factors also create vulnerabilities. Data protection often lives within the risk management function, which is separate from cybersecurity. Security pros can be lulled into complacency by the belief that someone else is taking care of the data.

“Resilience and compliance-oriented security are handled by different teams within enterprises, leading to a lack of coordination,” said Forrester’s Ellis. “There’s a disconnect between how prepared people think they are and how prepared they actually are.”

Forrester’s Ellis: “There’s a disconnect between how prepared people think they are and how prepared they actually are.” Photo: SiliconANGLE

Then there are the technical factors. AI models behave fundamentally differently from traditional software, introducing complexity that conventional data protection measures don’t fully address.

Conventional software is deterministic, meaning it follows predefined rules that ensure the same input always yields the same output. AI models are probabilistic. They use statistical or learned estimation processes to infer plausible outputs from patterns in the training data.

“With interpretive or generative AI, you’re asking the engine to start thinking, and that causes it to spread its wings and stitch together internal and external data sources in ways that were never done before,” said Ashish Nadkarni, group vice president and general manager, worldwide infrastructure research at International Data Corp.

There’s no guarantee that a probabilistic model will produce the same results each time. Whereas deterministic systems fail loudly by generating errors, AI systems fail silently by delivering confident but wrong outputs.
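The contrast can be seen in a toy sketch. It is not how any production model works: `credit_tier`, `sample_tier` and the weights are hypothetical names and values invented for illustration. The rule-based function returns one answer for a given input, while the sampled stand-in can return different answers unless the random state is pinned, which is also what makes a run replayable.

```python
import random


def credit_tier(score: int) -> str:
    """Deterministic rule: the same score always maps to the same tier."""
    if score >= 700:
        return "low-risk"
    if score >= 600:
        return "medium-risk"
    return "high-risk"


def sample_tier(weights: dict[str, float], rng: random.Random) -> str:
    """Probabilistic stand-in: draw one tier from a weighted distribution."""
    tiers = list(weights)
    return rng.choices(tiers, weights=[weights[t] for t in tiers], k=1)[0]


# Hypothetical output distribution for a single, unchanged input.
weights = {"low-risk": 0.6, "medium-risk": 0.3, "high-risk": 0.1}

# 100 calls to the rule produce exactly one distinct answer.
deterministic_runs = {credit_tier(650) for _ in range(100)}

# 100 differently seeded draws for the same input produce several answers.
sampled_runs = {sample_tier(weights, random.Random(seed)) for seed in range(100)}

# Pinning the seed makes an individual draw reproducible.
replayed = sample_tier(weights, random.Random(7)) == sample_tier(weights, random.Random(7))
```

Nothing in the sampled path raises an error when it returns an unlikely tier, which is the silent-failure behavior described above.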

Off the mark

Missing or corrupted data can lead models to make decisions or recommendations that appear plausible but are far off the mark. In large language models, these errors manifest as “hallucinations,” which occur up to 20% of the time in many of the most popular chat engines despite years of research aimed at minimizing them.

Mistakes are still frustratingly common. A survey of 500 U.S. executives conducted last month by document processing platform maker Parseur Pte. Ltd. found that while 88% said they’re very or somewhat confident in the accuracy of the data feeding their analytics and AI systems, the same 88% report discovering errors in document-derived data at least sometimes, with 69% saying mistakes occur often or very often.

Agentic networks can magnify errors exponentially. “A single upstream data issue becomes a cascading failure,” said Countly’s Soner. “Without clear lineage and integrity guarantees, it becomes impossible to tell whether the model was wrong, the data was wrong, or the system state itself was inconsistent.”

There are ways to audit model performance to guard against anomalies, but they require examining input data and tracking the model’s reasoning process. Lost or compromised data can make such troubleshooting impossible.

“A change to one library deep in the stack can have no impact, or it can mean you’re suddenly getting completely different answers, even though nothing appears to have changed,” said Miriam Friedel, vice president of machine learning engineering at Capital One Financial Corp. Observability, logging and automated scanning support the kind of forensic analysis needed to diagnose such problems, she said.

Data overload

AI also introduces new data classes. Training data provides the real-world context that complex models learn from. It’s typically unstructured, and the volumes can be very large, making classification and traditional data protection measures difficult to implement. It’s tempting to take a “kitchen sink” approach to training data, loading everything into the model and letting it sort things out.

Capital One’s Friedel: You can get “completely different answers, even though nothing appears to have changed.” Photo: X

But that can be an invitation to a cybersecurity disaster. “If you have a database, you know what’s in that database. If you have an [enterprise resource planning] application, it’s only going to fetch data that’s relevant to it,” said IDC’s Nadkarni. “With AI, there’s a level of sprawl inside and outside of the company. People don’t often fully understand how massive that sprawl is and what kind of bad actors might be at play trying to compromise the data.”

Prompts and inference data must be captured in context logs, which document the information the model had at the time it made a decision. These records are critical where safety, accountability and recoverability matter.
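What a context log entry might hold can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of any vendor’s format: the `ContextRecord` fields, `to_log_line` and `verify_line` are all hypothetical names. Each record captures the model version, the prompt, the inference inputs and the output, plus a SHA-256 fingerprint so later edits to the stored line can be detected.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ContextRecord:
    """Hypothetical snapshot of what a model knew when it made a decision."""
    model_version: str
    prompt: str
    inference_inputs: dict
    output: str
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self) -> str:
        # Canonical JSON (sorted keys) so identical content hashes identically.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def to_log_line(record: ContextRecord) -> str:
    """Serialize a record and its fingerprint as one JSON line."""
    return json.dumps(
        {"record": asdict(record), "sha256": record.fingerprint()}, sort_keys=True
    )


def verify_line(line: str) -> bool:
    """Recompute the fingerprint from the stored record and compare."""
    entry = json.loads(line)
    return ContextRecord(**entry["record"]).fingerprint() == entry["sha256"]
```

A caller would append each line to write-once storage; the fingerprint only detects changes, it does not prevent them.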

Inference data is what models use to make decisions. It poses unique challenges because securing it while in use in third-party or cloud environments is difficult. Inference data is critical because it fuels continuous training, can be exposed externally and may trigger automated workflows. Despite these risks, many organizations don’t go to the trouble of classifying inference data, making it hard to protect.

“Inference outputs are rarely treated as first-class data,” said Countly’s Soner. “Replay becomes impossible once actions have been triggered. AI-generated data must be governed in the same way as source data, not treated as logs or exhaust.”

“There’s a lack of appreciation for generated data because it summarizes a lot of input data,” said Gagan Gulati, senior vice president and general manager of data services at NetApp Inc. “Primary data typically has rules and regulations around its use. Generated data typically has none.”

That wouldn’t be an issue if generated data simply vanished into the ether, but it has a way of sticking around. Without controls in place, AI models can remember prior interactions and incorporate them into their short-term memory. This recursive output can magnify errors and introduce new vulnerabilities.

PII problem

For instance, users who include personally identifiable information in prompts can see that data come back in responses days later. Without proper guardrails, prompt data can even become part of a model’s training set and re-emerge in unpredictable ways.

NetApp’s Gulati: “Primary data typically has rules around its use. Generated data typically has none.” Photo: SiliconANGLE

AI also presents new challenges in managing data access, a critical component of resilience. Organizations don’t want to train models on production data, so they make copies. “That’s a data lineage problem,” said NetApp’s Gulati. “The data set is leaving the perimeter, but all the same protection rules must apply.”

Agents present new challenges in data classification and access controls, said David Lee, field chief technology officer at identity management firm Saviynt Inc. Weak entitlements, overly broad permissions and abandoned accounts undermine data resilience because all AI systems ultimately reach data through the same identity constructs as people.

Organizations need fine-grained authorization layers to make sure agents don’t access sensitive information they don’t need, he said. AI is so new, though, that such controls often aren’t in place.

According to Saviynt’s recent AI Risk Report, 71% of 235 security leaders said AI tools already access core operational systems, but just 16% believe they govern that access effectively. More than 90% said they lack full visibility into AI identities or the ability to detect or contain misuse if it happened.

“The complicated part is when you have a delegation model where an AI agent calls sub-agents that have their own permissions,” Lee said. “It becomes almost impossible to see what’s connected to what, what privileges are in place and who’s granting what access.”
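One way to picture the visibility problem is to treat delegation as a graph and walk it. The sketch below is an illustration only, assuming an organization can export two hypothetical tables: `delegates`, mapping each agent to the sub-agents it may call, and `permissions`, mapping each agent to the privileges it holds. The function name `reachable_permissions` is likewise made up.

```python
def reachable_permissions(
    agent: str,
    delegates: dict[str, list[str]],
    permissions: dict[str, set[str]],
) -> dict[str, set[str]]:
    """Return every agent reachable from `agent` with the privileges it holds.

    Uses an iterative depth-first walk and a visited set, so delegation
    cycles (agent A calls B, which calls A) do not loop forever.
    """
    found: dict[str, set[str]] = {}
    stack = [agent]
    while stack:
        current = stack.pop()
        if current in found:
            continue  # already visited; also breaks cycles
        found[current] = set(permissions.get(current, set()))
        stack.extend(delegates.get(current, []))
    return found


# Hypothetical delegation tree with one cycle (crawler can call planner).
delegates = {
    "planner": ["researcher", "emailer"],
    "researcher": ["crawler"],
    "crawler": ["planner"],
}
permissions = {
    "planner": {"read:tickets"},
    "researcher": {"read:crm"},
    "crawler": {"read:web", "write:cache"},
    "emailer": {"send:email"},
}

exposure = reachable_permissions("planner", delegates, permissions)
effective = set().union(*exposure.values())  # everything the planner can trigger
```

The `effective` set shows that an agent holding a single read privilege can, through delegation, reach write and send privileges it was never granted directly.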

Agents’ ability to derive new kinds of data by combining multiple sources presents problems with data classification, an essential component of access management.

“Let’s say I’ve got data classified as confidential and other data classified as personally identifiable information,” Lee said. “My agent creates a report query by putting that data together. It’s now come up with new data. How does that get classified?”

Classification is a slow and arduous task that many businesses abandoned or simplified years ago, but Lee sees the discipline staging a return. “We’re going to need a three-tiered approach around what the data is, who has the right to see it, and what they want to do with it,” he said. “Today’s systems weren’t designed for that.”
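Lee’s question has no settled answer in the article. One conservative convention, shown here purely as an assumption, is for derived data to inherit every label of every source, and for access to depend on all three tiers: the data’s labels, the requester’s role and the stated purpose. The policy tables, role names and purposes below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    name: str
    labels: frozenset[str]


def derive(name: str, *sources: Dataset) -> Dataset:
    """Derived data inherits the union of its sources' labels (an assumed rule)."""
    labels = frozenset().union(*(source.labels for source in sources))
    return Dataset(name, labels)


# Hypothetical policy tables: which labels a role is cleared for, and which
# purposes each label permits.
ROLE_CLEARANCES = {
    "credit_analyst": frozenset({"confidential"}),
    "privacy_officer": frozenset({"confidential", "pii"}),
}
PURPOSES_BY_LABEL = {
    "confidential": frozenset({"reporting", "audit"}),
    "pii": frozenset({"audit"}),
}


def may_access(dataset: Dataset, role: str, purpose: str) -> bool:
    """Three-tier check: what the data is, who is asking, and why."""
    cleared = ROLE_CLEARANCES.get(role, frozenset())
    if not dataset.labels <= cleared:
        return False  # role lacks clearance for at least one label
    return all(
        purpose in PURPOSES_BY_LABEL.get(label, frozenset())
        for label in dataset.labels
    )


financials = Dataset("financials", frozenset({"confidential"}))
contacts = Dataset("contacts", frozenset({"pii"}))
report = derive("agent_report", financials, contacts)
```

Under this rule the agent’s combined report is both confidential and PII, so a role cleared only for confidential data loses access to it even though it could read one of the sources. The trade-off is over-restriction: labels only ever accumulate.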

Preparing for AI

These factors collectively underscore the need for organizations to prioritize data resilience as they move AI models from pilots into production. By most accounts, they have a long way to go. A recent survey of 200 people in charge of AI initiatives at software providers and enterprises by CData Software Inc. found that only 6% said their data infrastructure is fully ready for AI.

Saviynt’s Lee: Agent delegation can make it “impossible to see what’s connected to what.” Photo: LinkedIn

“AI remains a black box for many enterprises, and that is a primary reason executives hesitate to trust it,” said Daniel Shugrue, product director at software delivery platform firm Digital.ai Software Inc. “Enterprises aren’t blocked from scaling AI because of models; they’re blocked because they don’t trust the systems that feed, transform and act on the data on which AI depends.”

Data resilience experts advocate for what they call AI-grade recoverability. That encompasses knowing what data was used, what state the model was in at the time and confidence that the process can be replayed or rolled back.

“Part of resiliency is that when things break, you can understand and track down quickly where the breakage is and how to fix it,” said Capital One’s Friedel.

Ensuring data resilience in the AI age requires new tools and skill sets:

- Immutable event logs are permanent, tamper-evident records of system events that ensure every decision and data change can be traced and audited.

- Versioned schemas provide structured data definitions that can be tracked over time.

- End-to-end lineage analysis shows where data originated, how it was transformed and how it influenced model outputs.

- Replayable pipelines enable deterministic process re-execution for reproducing model decisions.

- Blast radius isolation contains the impact of erroneous outputs or actions so failures don’t cascade.

- Tested rollback procedures are documented methods for reverting models, data or system state to a known-good point without business disruption.

- Data retention policies ensure that redundant, obsolete and trivial data are deleted rather than archived with the risk of contamination.
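The first item in the list above can be illustrated with a hash chain, a common way to make an append-only log tamper-evident. This is a minimal in-memory sketch, not a production design: `append_event`, `verify` and the `GENESIS` constant are hypothetical names, and true immutability would additionally require write-once storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list[dict], event: dict) -> dict:
    """Append an event, linking it to the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {
        "event": dict(event),  # copy so later caller edits don't alias the log
        "prev": prev_hash,
        "hash": _digest(prev_hash, event),
    }
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Walk the chain; any edited, removed or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Because each hash covers the one before it, changing an early event invalidates every later entry, which is what lets an auditor trust the sequence of decisions rather than just individual records.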

The success of enterprise AI ultimately depends less on novel model architectures and more on the unglamorous disciplines of cyber resilience, data protection and operational rigor. Organizations that can guarantee the robustness of the data that fuels their AI models will be in a better position to scale agentic systems into core business processes. Those that can’t will remain mired in pilots and proofs of concept. Investing in resilient data foundations now is the clearest path to trusted outcomes later.

Image: SiliconANGLE/Google Whisk