Synthetic materials are widely used across science, engineering, and industry, but most are designed to perform only a narrow range of tasks. A research team at Penn State set out to change that. Led by Hongtao Sun, assistant professor of industrial and manufacturing engineering (IME), the group developed a new fabrication technique that can produce multifunctional “smart synthetic skin.” These adaptable materials can be programmed to perform a wide range of tasks, including hiding or revealing information, enabling adaptive camouflage, and supporting soft robotic systems.

Using this new approach, the researchers created a programmable smart skin made of hydrogel, a soft, water-rich material. Unlike conventional synthetic materials with fixed behaviors, this smart skin can be tuned to respond in multiple ways. Its appearance, mechanical behavior, surface texture, and ability to change shape can all be adjusted when the material is exposed to external triggers such as heat, solvents, or physical stress.

The findings were published in Nature Communications, where the study was also chosen for Editors’ Highlights.

Inspired by Octopus Skin and Living Systems

Sun, the project’s principal investigator, said the concept was inspired by cephalopods such as octopuses, which can rapidly alter the appearance and texture of their skin. These animals use such changes to blend into their surroundings or communicate with each other.

“Cephalopods use a complex system of muscles and nerves to exhibit dynamic control over the appearance and texture of their skin,” Sun said. “Inspired by these soft organisms, we developed a 4D-printing system to capture that concept in an artificial, soft material.”

Sun also holds affiliations in biomedical engineering, materials science and engineering, and the Materials Research Institute at Penn State. He described the method as 4D printing because the printed objects are not static. Instead, they can actively change in response to environmental conditions.

Printing Digital Instructions Into Material

To achieve this adaptability, the team used a technique called halftone-encoded printing. This method converts image or texture data into binary ones and zeros and embeds that information directly into the material. The approach is analogous to how dot patterns are used in newspapers or photographs to create images.
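
As a rough illustration of the halftone idea described above, the sketch below turns grayscale values into a pattern of ones and zeros using ordered dithering with a standard 4x4 Bayer threshold matrix. This is a generic image-processing technique chosen for illustration, not the team's actual encoding pipeline; the function names `halftone` and `fill_fraction` and the sample image are hypothetical.

```python
# Illustrative sketch only: ordered dithering with a 4x4 Bayer matrix.
# A generic halftoning method, NOT the printing pipeline used in the study.

BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def halftone(gray):
    """Convert a 2D list of gray levels in [0, 1] into a 2D list of 0/1 bits."""
    bits = []
    for y, row in enumerate(gray):
        out_row = []
        for x, value in enumerate(row):
            # The threshold depends on position, so the local density of 1s
            # approximates the gray level of that region.
            threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16
            out_row.append(1 if value > threshold else 0)
        bits.append(out_row)
    return bits


def fill_fraction(bits):
    """Return the fraction of cells set to 1 in a binary pattern."""
    total = sum(len(row) for row in bits)
    return sum(sum(row) for row in bits) / total


# Hypothetical 8x8 input: left half at gray level 0.25, right half at 0.75.
image = [[0.25] * 4 + [0.75] * 4 for _ in range(8)]
pattern = halftone(image)

for row in pattern:
    print("".join(str(bit) for bit in row))
```

The point of the sketch is that each region's share of ones tracks its gray level, which is how a binary dot pattern can carry continuous-tone information. How such a pattern maps to local material response in the hydrogel is specific to the study and is not modeled here.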

By encoding these digital patterns throughout the hydrogel, the researchers can program how the smart skin reacts to different stimuli. The printed patterns determine how various regions of the material respond. Some areas may swell, shrink, or soften more than others when exposed to temperature changes, liquids, or mechanical forces. By carefully designing these patterns, the team can control the material’s overall behavior.

“In simple terms, we’re printing instructions into the material,” Sun explained. “Those instructions tell the skin how to react when something changes around it.”

Hiding and Revealing Images on Demand

One of the most eye-catching demonstrations involved the material’s ability to hide and reveal visual information. Haoqing Yang, a doctoral candidate in IME and the paper’s first author, said this capability highlights the potential of the smart skin.

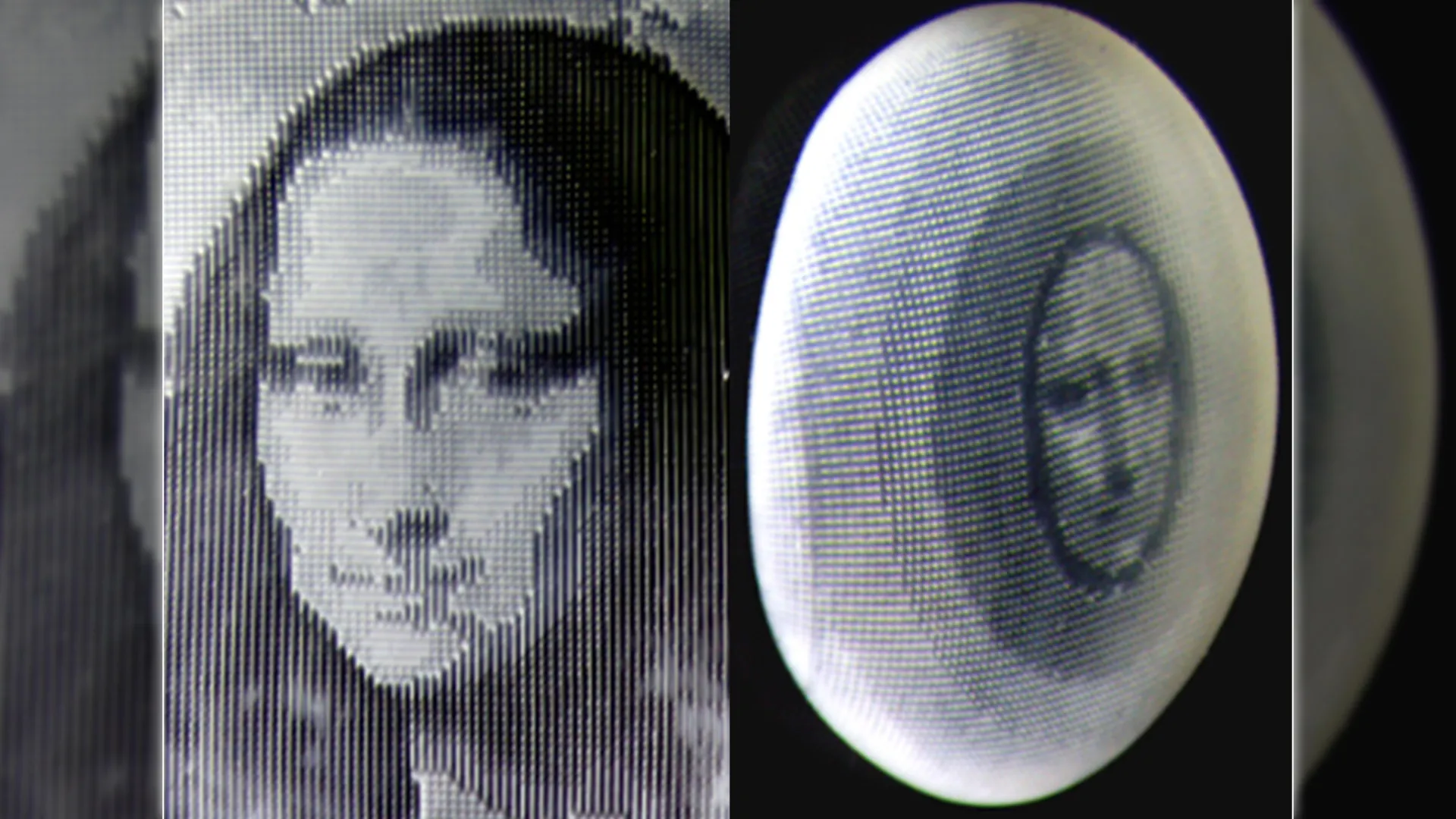

To demonstrate the effect, the team encoded an image of the Mona Lisa into the hydrogel film. When the film was washed with ethanol, it appeared transparent and showed no visible image. The hidden image became clear only after the film was placed in ice water or gradually heated.

Yang noted that the Mona Lisa was used only as an example. The printing technique allows virtually any image to be encoded into the hydrogel.

“This behavior could be used for camouflage, where a surface blends into its environment, or for information encryption, where messages are hidden and only revealed under specific conditions,” Yang said.

The researchers also showed that concealed patterns could be detected by gently stretching the material and analyzing how it deforms using digital image correlation analysis. This means information can be revealed not only visually but also through mechanical interaction, adding an extra layer of security.

Shape Shifting Without Multiple Layers

The smart skin also demonstrated remarkable flexibility. According to Sun, the material can easily shift from a flat sheet into complex, bio-inspired shapes with detailed surface textures. Unlike many other shape-changing materials, this transformation does not require multiple layers or different substances.

Instead, the changes in shape and texture are controlled entirely by the digitally printed halftone patterns within a single sheet. This allows the material to replicate effects similar to those seen in cephalopod skin.

Building on this capability, the team showed that multiple functions can be programmed to work together. By carefully designing the halftone patterns, they encoded the Mona Lisa image into flat films that later transformed into three-dimensional forms. As the sheets curved into dome-like shapes, the hidden image slowly appeared, showing that changes in shape and visual appearance can be coordinated within one material.

“Similar to how cephalopods coordinate body shape and skin patterning, the synthetic smart skin can simultaneously control what it looks like and how it deforms, all within a single, soft material,” Sun said.

Expanding the Potential of 4D-Printed Hydrogels

Sun said the new work builds on earlier research by the team on 4D-printed smart hydrogels, which was also published in Nature Communications. That earlier study focused on combining mechanical properties with programmable transitions from flat to three-dimensional forms. In the present research, the team expanded the approach by using halftone-encoded 4D printing to integrate even more functions into a single hydrogel film.

Looking ahead, the researchers aim to create a scalable and versatile platform that enables precise digital encoding of multiple functions inside one adaptive material.

“This interdisciplinary research at the intersection of advanced manufacturing, intelligent materials and mechanics opens new opportunities with broad implications for stimulus-responsive systems, biomimetic engineering, advanced encryption technologies, biomedical devices and more,” Sun said.

The study also included Penn State co-authors Haotian Li and Juchen Zhang, both doctoral candidates in IME, and Tengxiao Liu, a lecturer in biomedical engineering. H. Jerry Qi, professor of mechanical engineering at Georgia Institute of Technology, also collaborated on the project.